Explainable Vision AI: Opening the Black Box for Compliance

Introduction — Why Transparency Is Now a Board-Level Mandate

In today’s business landscape, AI is no longer just a technology decision; it’s a governance issue. From automated quality inspections on manufacturing lines to identity verification in financial services and brand visibility tracking in retail, computer vision systems are making decisions that directly impact operations, customers, and legal standing.

The challenge? Many of these AI systems function as black boxes: highly accurate, yet difficult to interpret or audit.

This opacity is no longer acceptable.

Regulators worldwide are stepping in with new rules that require companies to explain how AI-driven decisions are made. The EU AI Act, for example, requires high-risk AI applications, such as remote biometric identification, biometric categorization, and emotion recognition, to be transparent, traceable, and accountable. In the U.S., Executive Order 14110 (2023) laid out a national framework for safe and trustworthy AI, pushing federal agencies, and through them businesses, toward more explainable, fair, and auditable systems. And it doesn’t stop at government: industry regulators, investors, and even insurers now expect companies to demonstrate how their AI models work and why their outputs are reliable.

For C-level executives, this shift represents both a risk and an opportunity. On the one hand, a lack of explainability can lead to compliance failures, reputational damage, and stalled deployments. On the other hand, companies that embrace explainable AI gain strategic advantages — faster internal approval cycles, smoother regulatory audits, and increased stakeholder trust. Transparency builds resilience.

In this context, vision AI — often used for image classification, object detection, OCR, and content moderation — must evolve beyond just performance metrics. It needs to be auditable, interpretable, and defensible. That’s why many leading firms are reassessing their AI infrastructure to prioritize not only accuracy, but also explainability as a core business requirement.

In the sections that follow, we’ll explore the regulatory trends, technical challenges, and emerging strategies that can help executives unlock value from vision AI while ensuring it meets growing transparency demands.

The Compliance Landscape Shaping Vision AI

As artificial intelligence moves from experimental labs into critical business functions, governments and regulatory bodies around the world are redefining the rules of engagement. For organizations leveraging computer vision — whether for document verification, workplace safety, content filtering, or quality control — compliance is becoming a board-level concern. Staying ahead requires a clear understanding of the evolving legal and ethical frameworks.

✅ The EU AI Act: A Global Benchmark

The EU Artificial Intelligence Act is the world’s first comprehensive regulatory framework for AI. It classifies AI systems into four risk categories: unacceptable, high, limited, and minimal risk. Unacceptable-risk practices are banned outright. “High-risk” systems face strict requirements around transparency, documentation, human oversight, and explainability.

Computer vision applications often fall into this high-risk tier — particularly when used for:

Remote biometric identification (e.g., matching faces against a database; real-time use in public spaces for law enforcement is largely prohibited)

Safety-critical inspections (e.g., defect detection that acts as a safety component of regulated machinery or products)

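Access control and ID checks, depending on context (biometric verification used only to confirm that a person is who they claim to be is excluded from the high-risk list)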

Emotion recognition and behavioral analysis

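Organizations providing or deploying such systems must document how the system reaches its decisions and make human oversight possible. In certain cases, they must also give affected persons a clear explanation of individual decisions. Penalties scale with the violation. Prohibited practices can draw fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. Breaches of most other obligations, including those for high-risk systems, can draw up to €15 million or 3%.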
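Under Article 99 of the Act, the fine ceiling is a fixed amount or a percentage of worldwide annual turnover, whichever is higher. For prohibited practices that means €35 million or 7%; for most other obligations, €15 million or 3%. The “whichever is higher” rule makes the ceiling grow with company size. The sketch below computes that ceiling under two assumptions: the company is not an SME (for SMEs the Act applies whichever amount is lower), and the factors regulators weigh when setting the actual fine are ignored. The turnover figures are hypothetical:

```python
# Fine ceilings under the EU AI Act (Article 99), as (fixed cap in EUR, percent of turnover).
PROHIBITED_PRACTICES = (35_000_000, 7)
OTHER_OBLIGATIONS = (15_000_000, 3)  # includes most obligations for high-risk systems


def max_fine_eur(worldwide_turnover_eur: int, tier: tuple[int, int]) -> int:
    """Maximum fine for a non-SME undertaking: the fixed cap or the percentage
    of worldwide annual turnover, whichever is higher.
    Simplified sketch: SMEs get whichever is lower, and actual fines are set
    case by case at or below this ceiling."""
    cap_eur, percent = tier
    # Integer arithmetic avoids floating-point rounding on large euro amounts.
    return max(cap_eur, worldwide_turnover_eur * percent // 100)


# Hypothetical companies with EUR 100 million and EUR 2 billion annual turnover.
for turnover in (100_000_000, 2_000_000_000):
    print(turnover,
          max_fine_eur(turnover, PROHIBITED_PRACTICES),
          max_fine_eur(turnover, OTHER_OBLIGATIONS))
```

For the smaller company the fixed caps apply. For the €2 billion company the percentage dominates, giving ceilings of €140 million and €60 million.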

🇺🇸 U.S. Executive Order 14110: A Call for Trustworthy AI

In the United States, Executive Order 14110, issued in October 2023, emphasized the development and deployment of “safe, secure, and trustworthy AI.” An executive order directs federal agencies rather than creating binding law, and EO 14110 was rescinded in January 2025. Even so, it signaled strong federal expectations for:

Auditable AI pipelines

Bias and risk assessments

Transparent decision-making

Robust public-private governance mechanisms

This directive has prompted regulatory agencies such as the FTC, FDA, and FAA to begin updating their guidelines for AI-related products and services — including those that rely on vision-based automation.

📜 Sector-Specific Standards and Expectations

Even outside of broad AI legislation, several industries face their own domain-specific compliance pressures:

Finance: FINRA and the SEC are scrutinizing AI-driven surveillance and fraud-detection systems.

Healthcare: The FDA is actively developing guidance for AI-based diagnostic imaging tools.

Retail & Advertising: Consumer protection agencies are reviewing the use of AI in marketing, visual analytics, and brand monitoring.

Furthermore, emerging standards such as ISO/IEC 42001 (AI management systems) and ISO/IEC 24029-2 (robustness assessment of neural networks) are laying the groundwork for structured, international approaches to AI governance.

📌 What "Explainability" Means in Practice

From a compliance perspective, “explainability” isn’t just a technical feature — it’s a legal and ethical obligation. For vision AI, this includes:

Being able to show which visual features influenced a decision (e.g., bounding boxes, heatmaps)

Producing audit trails for each inference

Allowing humans to review and challenge outputs

Providing end-users with meaningful information, especially in consumer-facing contexts

For instance, if a facial recognition system denies access to an individual, regulations may require that the company explain why the match failed, how the system was trained, and what recourse the user has.
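In many face-verification systems, the match decision comes down to comparing a similarity score between two face embeddings against a threshold. A meaningful explanation can therefore report both numbers and what happens next. The sketch below assumes the embeddings come from some face-embedding model (not shown) and uses a hypothetical threshold of 0.6. Real thresholds are calibrated on validation data:

```python
import math
from dataclasses import dataclass


@dataclass
class VerificationDecision:
    matched: bool
    similarity: float
    threshold: float
    explanation: str  # human-readable reason, usable in logs and user notices


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def verify_face(probe: list[float], enrolled: list[float],
                threshold: float = 0.6) -> VerificationDecision:
    """Compare a live capture against the enrolled template and explain the outcome.
    The 0.6 default is a hypothetical placeholder, not a recommended value."""
    sim = cosine_similarity(probe, enrolled)
    if sim >= threshold:
        reason = f"Match: similarity {sim:.2f} is at or above the threshold {threshold:.2f}."
    else:
        reason = (f"No match: similarity {sim:.2f} is below the threshold {threshold:.2f}. "
                  "The case has been referred for manual review.")
    return VerificationDecision(sim >= threshold, sim, threshold, reason)


# Hypothetical embeddings for a live capture and an enrolled ID photo.
decision = verify_face([0.1, 0.9, 0.2], [0.8, 0.1, 0.3])
print(decision.explanation)
```

Recording the score and threshold with each decision answers “why did the match fail.” Naming the manual-review path gives the user a route to recourse.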

For C-level leaders, the message is clear: vision AI cannot be a black box. Legal, compliance, and risk teams must be empowered with tools and strategies to evaluate the behavior of AI systems, ensure traceability, and respond confidently to audits or public inquiries. The cost of ignoring this shift is rising — not just in regulatory penalties, but in brand trust and operational risk.

Why Vision Models Feel Like Black Boxes

For many executives, AI still carries an air of mystique. While the technology can deliver powerful results — detecting defects, recognizing logos, verifying identities — it often does so through processes that are difficult to explain, trace, or audit. This lack of interpretability is especially acute in computer vision systems powered by deep learning, where even developers can struggle to articulate how a specific prediction was made.

🧠 Inside the “Black Box”: How Vision AI Makes Decisions

Most modern vision AI solutions are built on deep neural networks, such as Convolutional Neural Networks (CNNs) or Vision Transformers (ViTs). These models excel at identifying patterns in visual data, such as faces, product labels, or safety violations. However, they do so by passing each image through dozens or even hundreds of layers governed by millions of learned numerical parameters.

Rather than following a transparent logic tree, these models learn statistical correlations between image features and output labels based on large volumes of training data. As a result:

The model may recognize a wine label not by its logo, but by unrelated background elements in training images.

An NSFW classifier might flag a photo based on subtle skin-tone patterns, missing the broader context.

This internal reasoning process is invisible to users and stakeholders, which becomes a serious problem when compliance, fairness, or public accountability are required.

⚠️ Hidden Risks of Non-Transparent Vision AI

The black-box nature of vision AI introduces several business-critical risks:

Spurious correlations: A defect detection model may associate flaws with lighting conditions rather than actual product faults — leading to false positives or missed issues.

Data bias: If training data underrepresents certain demographics or product variations, the model may perform poorly in real-world scenarios — potentially leading to discriminatory outcomes or safety hazards.

Concept drift: Over time, as environments change (e.g., new packaging, updated uniforms, different lighting), the model’s accuracy can degrade silently without explanation.

Audit failure: Without visual explanations or logs, it becomes extremely difficult to answer questions from regulators, partners, or customers about why a system made a certain decision.
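Silent drift can often be caught before accuracy metrics are available. The approach is to compare the distribution of model confidence scores in production against a baseline captured at deployment. One common statistic for this is the Population Stability Index (PSI). The sketch below assumes scores in [0, 1] and equal-width bins. The 0.25 alert level is a widely used rule of thumb, not a regulatory standard, and all score values are hypothetical:

```python
import math


def population_stability_index(baseline: list[float], current: list[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (cur - base) * ln(cur / base), where cur and base are
    the share of scores in each bin. 0 means identical histograms; larger = more drift."""

    def bin_shares(scores: list[float]) -> list[float]:
        counts = [0] * bins
        for s in scores:
            counts[min(int(s * bins), bins - 1)] += 1  # a score of 1.0 goes in the last bin
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(scores), eps) for c in counts]

    base, cur = bin_shares(baseline), bin_shares(current)
    return sum((c - b) * math.log(c / b) for b, c in zip(base, cur))


DRIFT_ALERT = 0.25  # common rule of thumb; tune per use case

baseline_scores = [0.92, 0.88, 0.95, 0.91, 0.87, 0.93]  # hypothetical, at deployment
recent_scores = [0.61, 0.58, 0.72, 0.66, 0.90, 0.55]    # hypothetical, after a packaging change
psi = population_stability_index(baseline_scores, recent_scores)
print(f"PSI = {psi:.2f}", "-> investigate" if psi > DRIFT_ALERT else "-> stable")
```

The same check can run on summary statistics of explanations (for example, how much heatmap mass falls on the product). That makes it possible to notice when the model’s reasoning shifts, not just its confidence.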

🔍 Real-World Example: Misleading Attention in Logo Recognition

Imagine a brand visibility analytics tool used to track logo exposure in live sports broadcasts. The model returns a high “visibility score” for a scene where the brand appears to be prominent. On closer inspection, however, it turns out the model was triggered by a similar shape in the stadium background, not by the brand mark itself.

Without a clear explanation mechanism (such as heatmaps showing model attention), this error may go unnoticed — compromising marketing analytics, budget allocation, and contractual reporting.
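One simple guardrail for this failure mode is to measure how much of the explanation heatmap falls inside the region where the brand mark actually is, such as an annotated or detected bounding box. Detections where most of the evidence lies elsewhere are then flagged. The sketch below assumes a heatmap already produced by an attribution method and a hypothetical review threshold of 0.5:

```python
Heatmap = list[list[float]]
Box = tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max); max bounds exclusive


def attribution_share_in_box(heatmap: Heatmap, box: Box) -> float:
    """Fraction of the positive attribution mass that lies inside the box."""
    x_min, y_min, x_max, y_max = box
    inside = total = 0.0
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            v = max(value, 0.0)  # count only evidence *for* the prediction
            total += v
            if x_min <= x < x_max and y_min <= y < y_max:
                inside += v
    return inside / total if total > 0 else 0.0


def needs_review(heatmap: Heatmap, logo_box: Box, min_share: float = 0.5) -> bool:
    """Flag detections whose evidence mostly lies outside the logo (hypothetical threshold)."""
    return attribution_share_in_box(heatmap, logo_box) < min_share


# Toy 4x4 heatmap: most attribution sits bottom-right (e.g. stadium background),
# while the logo occupies the top-left 2x2 region.
heat = [[0.1, 0.1, 0.0, 0.0],
        [0.1, 0.1, 0.0, 0.0],
        [0.0, 0.0, 0.9, 0.8],
        [0.0, 0.0, 0.7, 0.9]]
print(needs_review(heat, (0, 0, 2, 2)))  # True
```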

For C-level decision-makers, the lesson is clear: without explainability, vision AI operates on blind trust. This undermines confidence, exposes the organization to regulatory and reputational risk, and limits the ability to scale AI responsibly across departments. The following sections will outline how to lift the lid on the black box — transforming opaque image analysis into transparent, auditable, and compliant systems.

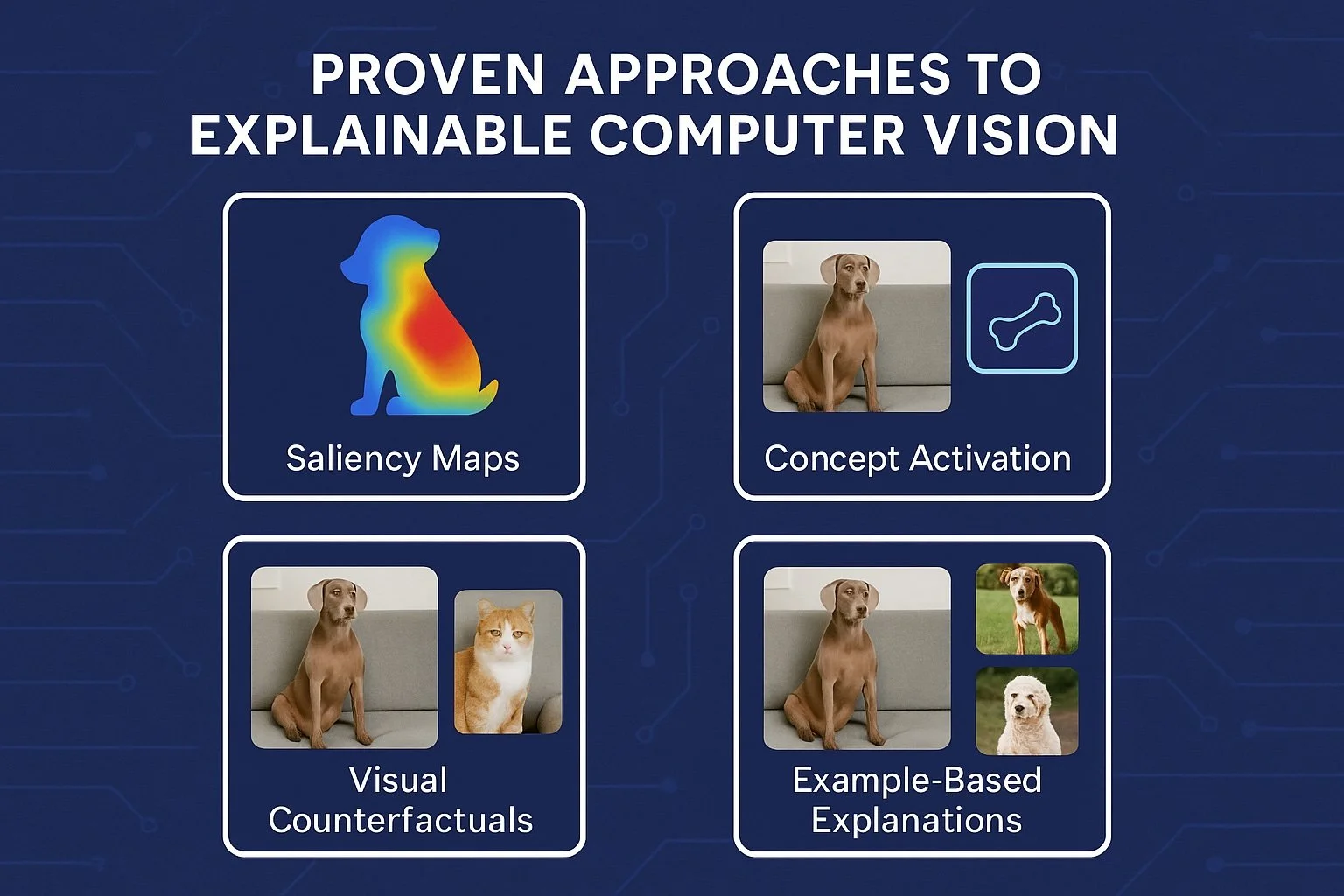

Proven Approaches to Explainable Computer Vision

The good news for business leaders is that explainability in AI is no longer a theoretical goal; it’s a practical reality. Over the last several years, the AI research community and enterprise technology vendors have developed a range of tools and frameworks that can make vision models more transparent and accountable. Many of these methods are post-hoc: they analyze a trained model without changing it. They can therefore often be added without sacrificing accuracy or retraining, at the cost of some extra computation per explanation.

Let’s explore the most effective approaches that allow organizations to lift the veil on complex image-processing models.

🔍 1. Local Explanations: Visualizing Individual Decisions

Local explanation methods focus on answering a simple but critical question:

Why did the model make this particular prediction?

In computer vision, these techniques usually highlight the specific regions of an image that most influenced the model’s decision. Common techniques include:

Grad-CAM (Gradient-weighted Class Activation Mapping): Produces heatmaps that overlay on the original image, showing where the model “looked” when making a prediction.

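Integrated Gradients: Assigns an importance score to each pixel by accumulating the model’s gradients along a path from a neutral baseline image (e.g., all black) to the actual input. This helps interpret fine-grained decisions, such as document OCR or label recognition.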

SHAP (Shapley Additive Explanations) for vision: Though more commonly used in tabular data, it can be adapted to visualize feature contributions in image classification.
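To make the Integrated Gradients item concrete: the attribution for input feature i is that feature’s change from a baseline, multiplied by the average gradient along the straight path between baseline and input. The attributions then sum, approximately, to the change in model output. The sketch below uses a toy logistic model with hand-derived gradients as a stand-in for a vision network, where each feature would be a pixel. The weights are hypothetical. Production code would use a framework’s automatic differentiation, for example the `IntegratedGradients` class in PyTorch’s Captum library:

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def model(x: list[float], w: list[float], b: float) -> float:
    """Toy differentiable 'classifier': probability = sigmoid(w . x + b)."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def model_gradient(x: list[float], w: list[float], b: float) -> list[float]:
    """Analytic gradient of the toy model: d sigmoid(z)/dx_i = p * (1 - p) * w_i."""
    p = model(x, w, b)
    return [p * (1.0 - p) * wi for wi in w]


def integrated_gradients(x: list[float], baseline: list[float],
                         w: list[float], b: float, steps: int = 200) -> list[float]:
    """Riemann-sum approximation of Integrated Gradients along the straight
    line from `baseline` to `x`."""
    grad_sum = [0.0] * len(x)
    for k in range(1, steps + 1):
        alpha = k / steps
        point = [bi + alpha * (xi - bi) for xi, bi in zip(x, baseline)]
        for i, g in enumerate(model_gradient(point, w, b)):
            grad_sum[i] += g
    return [(xi - bi) * g / steps for xi, bi, g in zip(x, baseline, grad_sum)]


x = [1.0, 2.0, -1.0]           # hypothetical input features (e.g. pixel intensities)
baseline = [0.0, 0.0, 0.0]     # "black image" baseline
w, b = [0.5, -0.25, 1.0], 0.1  # hypothetical model parameters
attributions = integrated_gradients(x, baseline, w, b)
# Completeness check: attributions should sum to f(x) - f(baseline).
print(sum(attributions), model(x, w, b) - model(baseline, w, b))
```

The completeness check is a useful audit property. If the attributions do not add up to the output change, the explanation is not accounting for the whole decision, usually because too few integration steps were used.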

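For example, when using an Alcohol Label Recognition API, an attribution heatmap can confirm that the model identified the brand by focusing on the product logo, not irrelevant design elements. Grad-CAM needs access to the model’s internal gradients, so it applies to models you host or whose provider exposes them. For a black-box API, perturbation-based methods such as occlusion or LIME serve the same purpose.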
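Grad-CAM itself is a short computation once a framework has produced two things for the last convolutional layer: the feature maps A^k and the gradients of the class score with respect to them. Each map is weighted by its average gradient, the weighted maps are summed, and a ReLU keeps only the regions that push the score up. The pure-Python sketch below assumes a toy classifier whose class score is a weighted sum of globally averaged feature maps, so the gradients can be written by hand. The channel weights and maps are hypothetical, and upsampling the map to image resolution is omitted:

```python
Grid = list[list[float]]


def grad_cam(feature_maps: list[Grid], gradients: list[Grid]) -> Grid:
    """Grad-CAM: weight each feature map A^k by the spatial mean of its gradient
    dy_c/dA^k, sum the weighted maps, apply ReLU, and normalize to [0, 1]."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for a_k, g_k in zip(feature_maps, gradients):
        alpha_k = sum(sum(row) for row in g_k) / (height * width)  # channel importance
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha_k * a_k[i][j]
    cam = [[max(v, 0.0) for v in row] for row in cam]  # ReLU keeps positive evidence only
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam


def gap_head_gradients(class_weights: list[float], height: int, width: int) -> list[Grid]:
    """Toy head y_c = sum_k weight_k * mean(A^k), so dy_c/dA^k[i][j] = weight_k / (height * width)."""
    return [[[wk / (height * width)] * width for _ in range(height)] for wk in class_weights]


# Toy 2x2 maps: channel 0 fires on the logo (top-left), channel 1 on the background.
maps = [[[1.0, 0.0], [0.0, 0.0]],
        [[0.0, 0.0], [0.0, 1.0]]]
grads = gap_head_gradients([2.0, -1.0], height=2, width=2)  # hypothetical class weights
print(grad_cam(maps, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```

Here the heatmap lights up only the logo region because the background channel lowers the class score. A reviewer would read that as evidence the brand was recognized for the right reason.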

These techniques are especially useful during compliance audits or incident reviews, as they provide clear, visual evidence of how AI is making decisions.

📊 2. Global Explanations: Understanding Model Behavior at Scale

While local methods help explain individual outputs, global explainability helps executives and data science teams understand how the model behaves across the entire dataset or population.

Key strategies include:

Feature importance mapping: Identifies which image characteristics (e.g., shape, color, texture) the model consistently relies on.

Concept activation vectors (CAVs): Measure how sensitive a model’s predictions are to human-understandable concepts, like “has a barcode” or “contains a face.”

Surrogate models: Simpler models (like decision trees) that approximate the behavior of a complex neural network to reveal decision logic in a more interpretable form.

These methods are crucial when documenting model behavior for regulators, investors, or internal compliance teams.
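A global surrogate can be as small as a one-rule decision tree. The procedure is to describe each image with a few human-readable properties, record the complex model’s predictions, and fit the simple model to those predictions rather than to ground truth. Its fidelity, the share of cases where it agrees with the complex model, indicates how far its rule can be trusted as a description. The sketch below uses hypothetical features (mean brightness and edge density) and a stand-in black box:

```python
Row = list[float]


def fit_stump(features: list[Row], black_box_labels: list[int]) -> tuple[int, float, int, float]:
    """Fit a depth-1 decision tree that mimics the black box.
    Rule: predict `polarity` if row[feature] > threshold, else 1 - polarity.
    Returns (feature index, threshold, polarity, fidelity)."""
    best = (0, 0.0, 1, -1.0)
    n = len(black_box_labels)
    for f in range(len(features[0])):
        for t in sorted({row[f] for row in features}):
            for polarity in (1, 0):
                preds = [polarity if row[f] > t else 1 - polarity for row in features]
                fidelity = sum(p == y for p, y in zip(preds, black_box_labels)) / n
                if fidelity > best[3]:
                    best = (f, t, polarity, fidelity)
    return best


def black_box(row: Row) -> int:
    """Stand-in for an opaque vision model (hypothetical): flags high edge density."""
    return 1 if row[1] > 0.5 else 0


# Hypothetical image descriptors: [mean brightness, edge density]
X = [[0.2, 0.1], [0.8, 0.3], [0.5, 0.7], [0.9, 0.9], [0.1, 0.6]]
labels = [black_box(row) for row in X]
feature, threshold, polarity, fidelity = fit_stump(X, labels)
names = ["brightness", "edge density"]
print(f"IF {names[feature]} > {threshold} THEN {polarity} ELSE {1 - polarity} "
      f"(fidelity {fidelity:.0%})")
```

The stump reaches 100% fidelity here only because the toy black box is itself a threshold rule. With a real network, a surrogate’s fidelity is usually lower and should be reported alongside its rule.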

🤝 3. Human-in-the-Loop Systems: Blending Automation with Oversight

In many regulated environments, full automation is not an option. Instead, the trend is toward “human-in-the-loop” (HITL) systems that combine the speed of AI with the judgment of human reviewers.

Examples:

A Face Detection and Recognition API flags potential mismatches during a KYC process, which are then verified by a compliance officer.

An Image Anonymization API identifies sensitive areas (like faces or license plates), but a privacy team reviews them before final redaction.

This collaborative model not only reduces error rates but also helps satisfy legal requirements for human oversight, particularly in high-risk or high-impact applications.
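The routing logic behind such a workflow is often a simple confidence policy. Confident results pass automatically, and everything else lands in a review queue with enough context for the reviewer. The sketch below applies this to the KYC example, assuming a hypothetical 0.9 auto-approval threshold and an in-memory queue standing in for a case-management system:

```python
from dataclasses import dataclass, field


@dataclass
class ReviewCase:
    case_id: str
    match_score: float
    reason: str


@dataclass
class KycRouter:
    auto_approve_at: float = 0.9  # hypothetical; calibrate on validation data
    queue: list[ReviewCase] = field(default_factory=list)

    def route(self, case_id: str, match_score: float) -> str:
        """Auto-approve confident face matches; send everything else to a compliance officer."""
        if match_score >= self.auto_approve_at:
            return "auto_approved"
        self.queue.append(ReviewCase(
            case_id, match_score,
            f"score {match_score:.2f} below auto-approval threshold {self.auto_approve_at:.2f}"))
        return "needs_human_review"


router = KycRouter()
print(router.route("cust-001", 0.97))  # auto_approved
print(router.route("cust-002", 0.71))  # needs_human_review
print(router.queue[0].reason)
```

Storing the reviewer’s final decision next to each queued case makes human overrides auditable later.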

🛠️ 4. MLOps Integration: Explainability as a Technical Discipline

Explainability cannot be treated as a one-off feature. It must be built into the model lifecycle, from training to deployment and monitoring. This is where MLOps (Machine Learning Operations) practices come into play.

Core requirements include:

Version-controlled training data and models

Experiment tracking systems that log hyperparameters, performance, and outputs

Automated generation of audit logs, including explanations tied to each model inference

Monitoring tools that detect when model explanations begin to shift due to data drift

By embedding explainability into their MLOps pipelines, organizations can scale vision AI responsibly, while maintaining full traceability and compliance readiness.
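As an illustration of the audit-log requirement, each inference can emit one structured record. The record links the input, the exact model version, the output, and a pointer to the stored explanation. The sketch below uses JSON Lines; the field names and the `explanations/` storage path are hypothetical. The input is stored as a SHA-256 hash, so the log can later confirm which image was scored (by re-hashing it) without keeping the image itself:

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(image_bytes: bytes, model_version: str, label: str,
                 confidence: float, explanation_uri: str) -> str:
    """Build one JSON Lines entry describing a single inference."""
    record = {
        "timestamp_utc": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,  # ties the decision to a versioned model artifact
        "input_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "label": label,
        "confidence": round(confidence, 4),
        "explanation_uri": explanation_uri,  # where the heatmap or other explanation is stored
    }
    return json.dumps(record, sort_keys=True)


def append_to_log(path: str, line: str) -> None:
    """Open in append mode so each call adds a line without rewriting earlier entries."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(line + "\n")


entry = audit_record(b"<image bytes>", "defect-detector-1.4.2", "scratch", 0.91237,
                     "explanations/inference-000123.png")
print(entry)
```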

For C-level executives, these techniques offer practical pathways to de-risk AI investments and strengthen regulatory confidence. Whether through quick wins with explainable APIs or more advanced custom solutions, opening the black box is no longer optional — it’s a strategic enabler.

Performance vs. Transparency — Finding the Sweet Spot

One of the most common concerns executives face when adopting explainable AI is the perceived trade-off between performance and transparency. In the race to maximize accuracy and automation, it’s tempting to prioritize models that deliver results — regardless of how they work internally. But in the current regulatory and risk landscape, this mindset is increasingly unsustainable.

The reality is that with the right strategy, organizations can balance model performance with explainability — without significantly compromising either. In fact, enhanced transparency often leads to better operational outcomes by improving trust, enabling faster incident response, and reducing costly errors.

⚖️ Quantifying the Trade-Off

Modern deep learning models, particularly in computer vision, are often optimized for accuracy alone, whether they are classifying product images, detecting NSFW content, or identifying vehicles in a scene. On narrow tasks these models can match or exceed human accuracy, but the more complex they become, the harder they are to explain.

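The accuracy cost depends on the approach. Post-hoc explanation layers leave a model’s predictions unchanged and add only compute overhead. Inherently interpretable architectures may give up some raw accuracy (e.g., a 1–3% drop, depending on the task). Either way, the risk-adjusted ROI can be far greater: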

Faster regulatory approvals due to documented decision logic

Lower reputational exposure in the event of a public complaint

Shorter internal review cycles as legal and compliance teams gain confidence in system behavior

In many cases, the “performance loss” is negligible compared to the cost of non-compliance or unexplainable false positives.

🧩 Model Architectures Designed for Interpretability

A growing number of model designs are being developed with explainability in mind from the outset. These include:

Prototype-based networks: These models compare input images against reference prototypes (e.g., a known wine label or industrial defect), making the decision-making process more interpretable for reviewers.

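Attention-based architectures: Such models (like Vision Transformers) naturally produce attention maps showing which image regions the model attended to. Attention weights are only a rough proxy for what actually drove the output, so teams often pair them with gradient-based attribution before using them to justify results to regulators or partners.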

Hybrid rule-AI systems: These combine neural networks with human-defined rules, allowing for greater oversight in safety-critical use cases such as manufacturing defect detection or content moderation.

Choosing the right architecture early in development can significantly reduce explainability challenges down the line.
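The prototype idea can be illustrated with a nearest-prototype classifier over image embeddings. The prediction is the label of the most similar reference example, and the explanation is simply which reference it resembled and how closely. This is a deliberately simplified stand-in; prototype networks such as ProtoPNet learn part-level prototypes inside the network. The embedding vectors and prototype names below are hypothetical:

```python
import math

# Hypothetical prototype library: prototype id -> (label, embedding vector).
PROTOTYPES: dict[str, tuple[str, list[float]]] = {
    "ref_label_brand_a_front": ("brand_a", [0.9, 0.1, 0.0]),
    "ref_label_brand_b_front": ("brand_b", [0.1, 0.9, 0.1]),
    "ref_defect_scratch_017": ("scratch_defect", [0.0, 0.2, 0.9]),
}


def classify_by_prototype(embedding: list[float]) -> tuple[str, str, float]:
    """Return (label, closest prototype id, Euclidean distance) so reviewers can
    compare the input side by side with the reference it matched."""
    best_id = min(PROTOTYPES, key=lambda pid: math.dist(embedding, PROTOTYPES[pid][1]))
    return PROTOTYPES[best_id][0], best_id, math.dist(embedding, PROTOTYPES[best_id][1])


label, proto_id, distance = classify_by_prototype([0.8, 0.2, 0.1])
print(f"Predicted {label}: looks most like {proto_id} (distance {distance:.2f})")
```

The explanation is a concrete reference image rather than an abstract score, which tends to be easier for non-technical reviewers to check.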

🧠 Wrapping Black-Box Models with Explanation Layers

In some scenarios — particularly when working with third-party APIs or legacy models — rebuilding a model from scratch isn’t feasible. In these cases, companies can use post-hoc explanation techniques to interpret outputs from otherwise opaque models.

For example:

A Background Removal API used in e-commerce image prep may be wrapped with heatmap visualization tools to verify that the object of interest (e.g., a chair or sneaker) is the focus of the segmentation, rather than the background clutter.

A Logo Recognition API can be paired with bounding-box validation tools to ensure that model confidence scores align with visually relevant areas of the image.

These methods offer a practical way to retrofit explainability into existing systems while maintaining deployment speed and minimizing costs.
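When only an API’s outputs are available, occlusion sensitivity is a common post-hoc technique. Each patch of the image is covered in turn, the model is re-queried, and the drop in confidence is recorded. Regions whose occlusion causes large drops are the ones the model relies on. The sketch below works on a toy grayscale image. `score_fn` stands in for a call to a recognition API (hypothetical), and each patch costs one extra call:

```python
from typing import Callable

Image = list[list[float]]


def occlusion_map(image: Image, score_fn: Callable[[Image], float],
                  patch: int = 2, fill: float = 0.0) -> Image:
    """Return a heatmap where each cell holds the score drop caused by
    blanking out the patch that contains it."""
    h, w = len(image), len(image[0])
    base = score_fn(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            occluded = [row[:] for row in image]  # copy so the original stays intact
            for y in rows:
                for x in cols:
                    occluded[y][x] = fill
            drop = base - score_fn(occluded)  # one extra model/API call per patch
            for y in rows:
                for x in cols:
                    heat[y][x] = drop
    return heat


def toy_logo_score(img: Image) -> float:
    """Hypothetical stand-in for an API: confidence = brightness of the top-left 2x2 area."""
    return sum(img[y][x] for y in range(2) for x in range(2)) / 4


image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(image, toy_logo_score):
    print(row)
```

Smaller patches give sharper maps but multiply the number of API calls. Teams often start coarse and refine only for flagged images.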

🏪 Real-World Example: Retail Use Case

A national food retail chain needed to ensure that allergen labels on private-label products were consistently recognized by an automated quality control system. Accuracy was critical, but so was regulatory compliance with food safety laws.

The solution combined a production-grade Object Detection API with Grad-CAM visualizations for every inference. If a model flagged a label as missing or incorrect, auditors could instantly review a heatmap showing whether the prediction was based on the actual allergen icon — or something irrelevant.

The result:

30% reduction in audit time

Fewer product recalls

Increased buyer confidence across the supply chain

For C-level executives, the takeaway is simple: Explainability is not the enemy of performance — it’s a performance multiplier. By integrating transparent models or enhancing opaque ones with interpretability tools, organizations can achieve high accuracy and maintain the trust and control necessary for long-term success.

Implementation Playbook for Executives

Bringing explainable vision AI into your organization doesn’t require reinventing your entire tech stack. With a clear roadmap, executives can introduce transparency step-by-step — starting with high-risk use cases, leveraging proven tools, and integrating compliance into every layer of the AI lifecycle. The key is to align technical decisions with strategic business outcomes: reduced legal exposure, increased operational trust, and faster time-to-value.

Here is a practical implementation playbook tailored for C-level leadership:

🧭 Step 1: Map High-Risk Vision AI Use Cases

Begin by identifying where AI-driven image analysis intersects with compliance, safety, or customer experience. Focus on areas where explainability isn’t just a benefit but a necessity.

Common high-risk scenarios include:

KYC and biometric verification (e.g., facial recognition, ID checks)

Workplace safety and visual surveillance

Medical image analysis or diagnostic triage

Brand and logo monitoring for contract reporting

User-generated content filtering (e.g., NSFW detection)

Each of these domains may fall under evolving regulatory oversight and require the ability to justify AI decisions to regulators, partners, or end users.

🧰 Step 2: Choose the Right Tools for Explainability

Executives don’t need to start from scratch. Many ready-to-use APIs already support transparency features, while custom solutions offer tailored control over explainability components.

Quick wins with production-grade APIs:

Image Anonymization API – Provides bounding boxes of sensitive regions (e.g., faces, license plates) that are easy to audit.

Brand Mark & Logo Recognition API – Includes detection metadata that can be tied to heatmaps for validation.

NSFW Recognition API – Supports content scoring with confidence thresholds and categorical breakdowns.

Strategic investment in custom vision solutions:

Tailored to business-specific risks and performance goals

Enable integration of transparency features from the design phase (e.g., model cards, concept tracking, attention overlays)

Delivered with compliance-aligned documentation for long-term governance

By selecting the right building blocks early on, companies can reduce complexity, enhance speed-to-compliance, and control operational costs.

📋 Step 3: Establish Governance Frameworks

Explainability must be embedded in governance processes — not just the model code. Build policies and controls that enable repeatable, auditable AI development.

Include:

Model cards for every deployed model, documenting training data, intended use, limitations, and known risks.

Data lineage tracking to show how datasets evolve and feed into predictions.

Bias and fairness testing procedures to ensure regulatory alignment.

Audit-ready logs for inference history, visual explanations, and human overrides.

These governance assets not only support regulatory compliance but also increase internal stakeholder confidence in deploying AI across departments.
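Bias testing often starts with disaggregated evaluation. The same metric is computed separately for each group in a labeled test set, and an alert fires when the gap between the best- and worst-served groups exceeds a tolerance. The sketch below uses accuracy and a hypothetical 5-point tolerance. The right metric depends on the use case; for face matching, per-group false match and false non-match rates are more informative. The group labels are placeholders:

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, int, int]]) -> dict[str, float]:
    """records: (group, prediction, true_label) triples -> accuracy per group."""
    correct: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for group, prediction, truth in records:
        total[group] += 1
        correct[group] += int(prediction == truth)
    return {g: correct[g] / total[g] for g in total}


def fairness_gap(per_group: dict[str, float]) -> float:
    """Difference between the best- and worst-performing groups."""
    return max(per_group.values()) - min(per_group.values())


TOLERANCE = 0.05  # hypothetical; set together with legal and compliance teams

test_results = [  # hypothetical evaluation records
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 0, 1), ("group_b", 0, 1), ("group_b", 1, 1),
]
scores = accuracy_by_group(test_results)
gap = fairness_gap(scores)
print(scores, f"gap={gap:.2f}", "FAIL" if gap > TOLERANCE else "PASS")
```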

💰 Step 4: Define ROI in Terms of Risk Reduction and Efficiency Gains

Explainable AI isn’t just about meeting legal requirements — it can drive measurable business outcomes:

Reduced audit and certification costs due to better documentation

Fewer false positives in quality control or fraud detection, leading to operational savings

Faster onboarding of enterprise clients in regulated industries (finance, healthcare, retail)

Enhanced brand reputation through transparent AI practices and consumer trust

When positioned correctly, investments in explainability contribute directly to cost optimization, revenue growth, and risk mitigation.

🚀 Step 5: Accelerate Time-to-Value with a Phased Rollout

Rather than launching a full-scale initiative, start with a focused pilot project.

Example timeline:

Day 0–30: Identify one high-impact use case (e.g., identity verification), implement an off-the-shelf vision API with visual explanation tools, and assess baseline performance.

Day 31–90: Expand the solution with governance features (model cards, audit logs), involve compliance/legal teams, and refine deployment workflows.

Day 91+: Scale horizontally to other departments or regions, incorporating custom-built AI modules where deeper control or competitive differentiation is required.

This phased approach ensures low-risk adoption, tangible results, and early internal buy-in.

For C-level leaders, this playbook offers a clear and executable path to embed explainability into computer vision pipelines. By combining plug-and-play APIs with tailored development and strong governance, organizations can future-proof their AI investments — and turn compliance into a strategic advantage.

Conclusion — Turning Transparent Vision AI into Competitive Advantage

As artificial intelligence becomes deeply embedded in enterprise operations, transparency is no longer optional — it’s a core business requirement. Regulatory frameworks like the EU AI Act and U.S. federal directives are reshaping expectations around how AI systems must behave, especially in high-impact domains like facial recognition, identity verification, content moderation, and quality control.

For computer vision in particular, the stakes are rising. These systems are often deployed in scenarios where human safety, customer trust, legal compliance, and brand reputation are on the line. Relying on black-box models with opaque decision-making processes introduces unacceptable levels of operational and reputational risk.

But there is a clear path forward.

Executives who proactively embrace explainable vision AI are not just “checking a box” — they are unlocking competitive advantages across the business:

Stronger compliance readiness that reduces the risk of fines, audit delays, or legal disputes

Faster deployment cycles as internal stakeholders gain trust in model behavior

Improved collaboration between technical, legal, and operational teams through shared visibility

Enhanced customer transparency that builds brand credibility and loyalty

Moreover, explainability enables organizations to learn from their AI systems, identifying edge cases, correcting biases, and fine-tuning models based on real-world performance. This continuous feedback loop improves not only compliance — but also accuracy, efficiency, and long-term ROI.

By combining production-ready APIs (such as object detection, anonymization, OCR, or content moderation) with custom-built, explainable solutions, enterprises can scale their AI initiatives confidently and responsibly. Transparent vision AI becomes more than a compliance strategy — it becomes a foundation for trustworthy, scalable innovation.

The call to action for C-level leaders is clear:

Audit your current vision AI deployments. Identify where explainability matters most. Start small if needed — but start now. In a world where scrutiny is increasing and trust is currency, explainability is not just a feature.

It’s your edge.