OCR for Arabic & Cyrillic Scripts: Multilingual Tactics

Introduction — Why RTL & Cyrillic-Friendly OCR Matters

Emerging Market Boom

The digital landscape is expanding rapidly in emerging markets, with regions like the Middle East, North Africa (MENA), Central Asia and Eastern Europe seeing unprecedented growth in smartphone adoption and internet connectivity. According to recent market insights, the MENA region alone is expected to surpass 500 million internet users by 2026. This surge in digital users opens massive opportunities for apps and platforms that cater to local languages — specifically, those written in Arabic and Cyrillic scripts.

For developers, this growing market represents a goldmine of potential users. But building inclusive applications that truly resonate with these audiences means supporting the languages they use every day. And that’s where the challenge begins.

The Limitations of Latin-Centric OCR

Most Optical Character Recognition (OCR) technologies today are optimized primarily for Latin-based scripts — like English, Spanish and French. This design choice is rooted in the historical dominance of Latin scripts in global software development. Unfortunately, this leaves Arabic, Cyrillic and other non-Latin scripts with subpar recognition accuracy.

Arabic is written from right to left (RTL), with letters that change shape based on their position in a word. In addition, it features complex ligatures — combinations of characters that join together seamlessly. Cyrillic, used in languages like Russian, Ukrainian and Serbian, is written from left to right (LTR) but introduces its own set of complexities. Many Cyrillic characters resemble Latin characters, leading to frequent misrecognition during OCR processing. For example, the Cyrillic letter "В" (which sounds like "V") is often mistaken for the Latin "B" and "С" is confused with "C".

Bridging the Gap with Intelligent OCR

To truly unlock the potential of these emerging markets, developers need OCR solutions that can handle:

Bidirectional text flows — smoothly transitioning between RTL and LTR when Arabic or Hebrew is mixed with numerals or Latin text.

Ligature handling — understanding when characters join together and when they stand alone.

Script switching — seamlessly recognizing both Arabic and Cyrillic characters within the same document.

Advanced OCR engines are now being designed with these capabilities, making it possible for developers to deliver accurate, multilingual text extraction. By addressing the specific challenges of RTL and Cyrillic scripts, these solutions are paving the way for more inclusive applications in MENA, Eastern Europe and beyond.

A Growing Need for Localized Applications

Banking apps, government e-services, digital marketplaces and social platforms all require smooth text extraction from Arabic and Cyrillic documents. Think about scanning passports, invoices, business cards and handwritten notes. Users expect apps to handle these tasks with the same accuracy and speed as they would for English or French. Failing to meet that expectation not only frustrates users but also limits the app's ability to scale in these regions.

To meet this growing demand, developers must go beyond standard OCR and adopt technologies specifically optimized for complex scripts. This involves not just recognizing characters but understanding how they flow, connect and appear in real-world documents.

In the next sections, we’ll explore how to build or integrate OCR solutions that excel in these multilingual environments — ensuring that your application is ready to thrive in emerging markets. We’ll dive into the challenges of script anatomy, best practices for image preprocessing and the role of language models in post-processing. Whether you are developing a mobile app or a cloud-based solution, mastering these tactics can transform how your product performs in MENA and Eastern Europe.

Script Anatomy & OCR Challenges

Understanding the unique structures and writing behaviors of Arabic and Cyrillic scripts is crucial for building effective OCR solutions. These scripts are fundamentally different from Latin-based languages, not only in visual representation but also in how characters interact with one another. In this section, we will explore the key structural elements that make OCR for these languages challenging and the strategies to overcome them.

Bidirectionality vs Unidirectionality

One of the most distinctive features of Arabic script is its bidirectional nature. Arabic, along with Hebrew, is written from right to left (RTL), while numbers within the text are written from left to right (LTR). This dual directionality poses a significant challenge for traditional OCR systems that are optimized for purely left-to-right (LTR) languages like English or French.

Example:

In an Arabic invoice, you might see a line like this:

فاتورة رقم 12345

The text "فاتورة رقم" (Invoice No.) is written RTL, but the number "12345" is LTR. An OCR engine must be able to correctly interpret the change in direction without distorting the meaning.

For Cyrillic scripts like Russian, Ukrainian and Serbian, the writing is strictly left to right, but it introduces its own set of complexities. Many Cyrillic characters resemble Latin letters, leading to frequent misclassifications. For instance:

The Cyrillic "В" is often mistaken for the Latin "B".

The Cyrillic "С" is misread as the Latin "C".

The Cyrillic "Н" can be confused with the Latin "H".

OCR engines need specialized training to distinguish these characters accurately, especially when processing multilingual documents.

Ligatures & Contextual Shaping

Arabic script is cursive: most letters join to their neighbors, so the shape of each letter depends on its position within a word. A letter can take up to four forms:

Isolated (when standing alone)

Initial (at the beginning of a word)

Medial (in the middle of a word, connected on both sides)

Final (at the end of a word, connected on the right side)

For example, the Arabic letter م (M) looks entirely different in its initial (مـ), medial (ـمـ) and final (ـم) forms. Traditional OCR systems, which recognize characters based on fixed glyph shapes, struggle to identify these variations correctly.

Additionally, Arabic text often includes ligatures — combinations of characters that join together to form a single, unified glyph. The most common example is "لا", which merges two characters, ل (L) and ا (A), into a single connected form. OCR engines must be trained to interpret these ligatures without breaking them apart incorrectly.

Cyrillic, while not cursive in the same way, still has its complexities. Certain letters can appear differently in handwriting versus printed text, requiring OCR engines to adapt to different fonts and styles seamlessly.

Diacritics & Decorative Marks

Arabic uses diacritics to mark short vowels and to disambiguate words. These small marks are placed above or below letters and can entirely change the meaning of a word. For example:

كَتَبَ (kataba): "he wrote"

كُتُب (kutub): "books"

كُتِبَ (kutiba): "it was written"

All three words share the consonant skeleton كتب and differ only in their diacritics, yet their meanings are entirely different. High-quality OCR must capture these marks with precision to avoid semantic errors.

For Cyrillic, the use of diacritics is less frequent, but characters like "Ё" in Russian still require special attention. OCR engines must recognize when diacritics are part of the character itself and not separate elements.
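At the text level, the difference between the two scripts can be sketched with Python's standard `unicodedata` module. Arabic vowel marks are separate combining code points that can be lost without touching the base letters, whereas "Ё" and "Й" are usually stored as single precomposed letters. The helper name `strip_marks` and the sample constants are illustrative assumptions, not part of any OCR library.

```python
import unicodedata

def strip_marks(text: str) -> str:
    """Decompose the text and drop every combining mark."""
    decomposed = unicodedata.normalize("NFD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))

# Two Arabic words built on the same consonants (kaf, ta, ba).
KATABA = "\u0643\u064e\u062a\u064e\u0628\u064e"  # kataba, "he wrote"
KUTUB = "\u0643\u064f\u062a\u064f\u0628"         # kutub, "books"

# Cyrillic Yo, once precomposed and once as base letter plus diaeresis.
YO_COMPOSED = "\u0401"
YO_DECOMPOSED = "\u0415\u0308"

# If the marks are lost, both Arabic words collapse to the same skeleton.
same_skeleton = strip_marks(KATABA) == strip_marks(KUTUB)

# NFC folds the decomposed Yo back into one letter, which is the form
# most Cyrillic lexicons and language models expect.
yo_normalized = unicodedata.normalize("NFC", YO_DECOMPOSED)
```

One consequence of this sketch: stripping marks is a reasonable fallback for comparing unvocalized Arabic, but applying it to Cyrillic silently turns "Й" into "И", so the two scripts need different normalization rules.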

Mixed-Script Documents

A common use case in emerging markets is the processing of mixed-script documents — like passports, invoices, e-commerce listings, or government forms. These documents often contain Arabic or Cyrillic text alongside Latin characters and numerical digits.

A typical passport entry may have:

Name and address in Cyrillic

Passport number in Latin characters

Birthdate in numeric format

Traditional OCR engines often struggle to interpret this multi-script structure accurately. For instance, they may misread the order of fields or fail to switch character recognition modes dynamically. To tackle this, modern OCR engines are being designed to detect script regions and process them independently, ensuring higher accuracy and consistency.

Impact on OCR Metrics

The unique features of Arabic and Cyrillic scripts directly impact the performance metrics of OCR systems:

Character Error Rate (CER): Higher for cursive and bidirectional texts due to misrecognitions of ligatures and diacritics.

Word Error Rate (WER): Compounded by incorrect contextual shaping and misread mixed-script regions.

Line Segmentation Errors: When RTL and LTR coexist, line breaks are sometimes miscalculated, leading to jumbled text output.

These challenges highlight the need for context-aware OCR systems that can dynamically adapt to script direction, ligature recognition and diacritic placement. The next sections will explore how to overcome these challenges through intelligent image pre-processing, robust recognition engines and effective post-processing techniques.

Image Pre-Processing for High-Fidelity Recognition

Before feeding images into an OCR engine, it is crucial to prepare them through a series of pre-processing steps. This stage is often overlooked but is incredibly important for achieving high accuracy, especially when working with complex scripts like Arabic and Cyrillic. Pre-processing enhances image quality, corrects distortions and optimizes text regions, making it easier for OCR models to interpret text accurately.

Why Image Pre-Processing Matters

The quality of the raw image directly impacts the OCR's performance. A slightly skewed or noisy image can cause text to be misread or entirely missed. For languages like Arabic and Cyrillic, where diacritics, ligatures and contextual letter shaping are critical, pre-processing is not just helpful — it’s essential.

Consider a scanned document with handwritten Cyrillic text. If the image is slightly rotated or blurry, the Cyrillic "В" could easily be misread as the Latin "B". For Arabic, a poor-quality image might lose the fine diacritic marks, changing the meaning of the text. Pre-processing helps to address these issues before they become recognition errors.

Key Pre-Processing Techniques

1. Deskewing & Orientation Correction

When documents are scanned or photographed, they are often slightly tilted. Even a few degrees of skew can disrupt the alignment of text, causing the OCR to misinterpret lines and characters. Deskewing algorithms detect the main text lines and adjust the image to be perfectly horizontal or vertical, as required.

For Arabic text, orientation correction matters just as much. If a page is captured upside down or sideways and the rotation goes undetected, the engine cannot follow the lines in their proper right-to-left (RTL) order, and the output comes back garbled. Deskewing algorithms commonly use edge detection and the Hough transform to find the dominant text-line angle, then rotate the image to compensate.
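To make skew estimation concrete, here is a minimal pure-Python sketch. It uses a projection-profile search rather than the Hough transform: for each candidate angle it projects the ink pixels onto rows and keeps the angle that produces the sharpest row histogram. The function names, the angle range and the synthetic test data are assumptions made for illustration. A production pipeline would more likely work on real image arrays with an imaging library.

```python
import math

def estimate_skew(points, max_angle=15.0, step=0.5):
    """Return the tilt (degrees) that best aligns ink pixels into rows.

    points: (x, y) coordinates of foreground (ink) pixels, where tilted
    text lines follow y = y0 + x * tan(angle).
    """
    best_angle, best_score = 0.0, -1
    steps = int(round(2 * max_angle / step))
    for i in range(steps + 1):
        angle = -max_angle + i * step
        theta = math.radians(angle)
        cos_t, sin_t = math.cos(theta), math.sin(theta)
        profile = {}
        for x, y in points:
            # Row index of this pixel after undoing a tilt of `angle`.
            row = round(y * cos_t - x * sin_t)
            profile[row] = profile.get(row, 0) + 1
        # A sharp profile (few, heavily populated rows) scores highest.
        score = sum(count * count for count in profile.values())
        if score > best_score:
            best_angle, best_score = angle, score
    return best_angle

def synthetic_lines(angle_deg, baselines=(20, 60, 100), width=200):
    """Generate ink coordinates for three text lines tilted by angle_deg."""
    slope = math.tan(math.radians(angle_deg))
    return [(x, y0 + x * slope) for y0 in baselines for x in range(width)]

estimated = estimate_skew(synthetic_lines(5.0))
```

Once the angle is known, rotating the image by the opposite amount straightens the lines. The method is direction-agnostic, so it behaves the same for RTL and LTR text.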

2. Noise Removal and Smoothing

Images captured with mobile devices or low-resolution scanners often contain visual noise — unwanted pixels that disrupt text clarity. Noise can come from paper texture, smudges, or camera artifacts. Common noise removal techniques include:

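Median Filtering: Replaces each pixel with the median of its neighborhood, which removes isolated speckles while keeping edges relatively intact.

Gaussian Blur: Averages out fine-grained noise, but softens edges as it does so, so kernel sizes should stay small.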

Bilateral Filtering: Reduces noise while keeping edges sharp — perfect for handwritten Arabic and Cyrillic where stroke clarity is crucial.

For Arabic scripts, noise removal is especially important because tiny marks (diacritics) could be mistaken for smudges and the OCR may ignore or misread them.
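The trade-off between removing speckles and keeping small marks can be seen in a minimal median filter. The sketch below works on a grayscale image stored as a list of rows, with 0 as background and 255 as ink. The function name and the tiny test images are illustrative assumptions, and a real pipeline would normally call an optimized library routine instead.

```python
from statistics import median

def median_filter(img, radius=1):
    """Apply a (2*radius+1)-square median filter, replicating edge pixels."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(h):
        for x in range(w):
            window = [
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-radius, radius + 1)
                for dx in range(-radius, radius + 1)
            ]
            out[y][x] = median(window)
    return out

# A single isolated ink pixel on an empty background (a speckle).
speckle = [[0] * 5 for _ in range(5)]
speckle[2][2] = 255

# A three-pixel-thick horizontal stroke.
stroke = [[255 if 1 <= y <= 3 else 0 for _ in range(5)] for y in range(5)]

cleaned_speckle = median_filter(speckle)
cleaned_stroke = median_filter(stroke)
```

The isolated pixel disappears while the centre of the thick stroke survives. The same behaviour would erase a one-pixel diacritic dot, which is why the filter radius has to be chosen relative to the scan resolution.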

3. Contrast Enhancement

In poorly lit or low-quality images, text may blend into the background, making it nearly invisible to OCR engines. Contrast enhancement techniques adjust the difference between the text and its background, making it more distinct.

Histogram Equalization: Distributes pixel intensity more evenly, enhancing text visibility.

CLAHE (Contrast Limited Adaptive Histogram Equalization): Localized contrast adjustment to prevent over-brightening.

Gamma Correction: Modifies the brightness levels to reveal hidden text features.

For Cyrillic text, contrast enhancement is particularly useful for distinguishing soft strokes in letters like "Б" or "З", which can be lost in shadowed areas.
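Of the three techniques, gamma correction is the simplest to write down: each intensity v in 0..255 is mapped to 255 * (v / 255) ** gamma. A minimal sketch follows, assuming a grayscale image stored as a list of rows. The function name is hypothetical.

```python
def gamma_correct(img, gamma):
    """Map each 8-bit intensity v to 255 * (v / 255) ** gamma."""
    lookup = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [[lookup[v] for v in row] for row in img]

shadowed = [[0, 64, 255]]
brightened = gamma_correct(shadowed, gamma=0.5)
```

A gamma below 1 lifts dark mid-tones while leaving pure black and pure white unchanged, which can help faint strokes in shadowed areas. A gamma above 1 does the opposite.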

4. Binarization Techniques

Binarization is the process of converting an image into pure black and white pixels, making text stand out sharply against the background. Traditional methods like Otsu's Thresholding work well for uniform backgrounds, but Arabic and Cyrillic documents often have varying shades due to paper textures or uneven lighting.

Adaptive Thresholding (e.g., Sauvola or Niblack): Divides the image into small segments and adjusts thresholds locally, enhancing readability in unevenly lit regions.

Dynamic Binarization: Combines global and local techniques to optimize thresholding for mixed lighting conditions.

Arabic script, with its cursive flow and tiny diacritic marks, greatly benefits from adaptive thresholding, which preserves these small details.
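As an illustration of local thresholding, the sketch below implements Sauvola's rule, T = m * (1 + k * (s / R - 1)), where m and s are the mean and standard deviation of the window around a pixel. The defaults k = 0.2 and R = 128 are commonly quoted values and are assumptions here, as are the function names. The naive loop is slow and only meant for small examples.

```python
import math

def sauvola_binarize(img, window=15, k=0.2, R=128.0):
    """Return a mask with 0 for ink and 255 for background."""
    h, w = len(img), len(img[0])
    r = window // 2
    out = [[255] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [
                img[yy][xx]
                for yy in range(max(0, y - r), min(h, y + r + 1))
                for xx in range(max(0, x - r), min(w, x + r + 1))
            ]
            mean = sum(vals) / len(vals)
            std = math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))
            threshold = mean * (1 + k * (std / R - 1))
            if img[y][x] <= threshold:
                out[y][x] = 0  # darker than the local threshold: ink
    return out

def lit_page(background, ink, size=7):
    """Build a tiny test page: uniform background with one ink dot in the middle."""
    page = [[background] * size for _ in range(size)]
    page[size // 2][size // 2] = ink
    return page

bright_mask = sauvola_binarize(lit_page(200, 50), window=7)
dim_mask = sauvola_binarize(lit_page(100, 25), window=7)
```

Both the brightly lit and the dimly lit page yield the same mask, with the single dot kept as ink. A fixed global threshold of 128 would have turned the entire dim page black.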

5. Region-of-Interest (ROI) Cropping

Documents often contain logos, stamps and borders that are irrelevant for text extraction. By isolating the Region-of-Interest (ROI), we can significantly reduce processing load and improve OCR accuracy.

Text Block Detection: Using edge detection and deep learning models like EAST (Efficient and Accurate Scene Text Detector), we can pinpoint text regions.

Selective Cropping: Isolates text while ignoring surrounding clutter.

Multi-Language Handling: Different ROIs can be processed separately if mixed languages (Arabic, Cyrillic, Latin) are present.

This is particularly effective for government forms, passports and invoices where mixed scripts coexist.

Practical Example: Arabic Invoice Processing

Imagine you need to extract text from an Arabic invoice:

The image is first deskewed to correct alignment.

Noise is removed, clearing smudges and enhancing clarity.

Contrast is boosted, highlighting fine diacritics.

Adaptive thresholding is applied to separate text from the background.

Finally, ROI cropping focuses on important fields like the invoice number, date and line items.

This pre-processed image is now clean, well-structured and ready for high-accuracy OCR.

Optimizing for Mobile Capture

When images are taken with mobile devices, challenges like glare, shadows and perspective distortion are common. Integrating on-device pre-processing — such as auto-deskew, noise reduction and real-time contrast adjustments — can optimize images before they are sent to cloud-based OCR engines. This not only improves recognition rates but also reduces bandwidth by transmitting only clean, focused images.

Image pre-processing serves as the foundation for successful text recognition. The more refined the image, the easier it is for OCR algorithms to interpret it correctly. In the next section, we will explore how recognition engines handle these pre-processed images and how they respect script directions, contextual shaping and mixed-language scenarios.

Recognition Engines That Respect Direction & Context

Pre-processing lays the groundwork, but the real challenge begins when the OCR engine interprets the text. For Arabic and Cyrillic scripts, the engine must handle not only basic character recognition but also directionality, contextual shaping and script-specific nuances. This section dives into how modern OCR models address these complexities to deliver high-accuracy text extraction.

Understanding Bidirectional Text Handling

One of the most complex aspects of Arabic OCR is its right-to-left (RTL) script orientation, which is completely opposite to most Latin-based languages. To add complexity, Arabic text often contains embedded left-to-right (LTR) elements like numerals or Latin words.

Example:

If you have an Arabic address with a street number:

شارع الملك فيصل 1234

The address reads from right to left, but the number "1234" is read left to right. OCR engines must detect these switches dynamically and parse the text accordingly.

To achieve this, modern OCR engines use bidirectional (BiDi) algorithms that recognize when the script direction changes and adjust the reading order during processing. These algorithms map character sequences and apply logical ordering before text interpretation.
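The core idea can be sketched with the bidirectional character classes that Unicode assigns to every code point, exposed in Python through `unicodedata.bidirectional`. The function below is a deliberate simplification and not the full Unicode Bidirectional Algorithm: it only groups characters into RTL and LTR runs and attaches neutral characters such as spaces to the preceding run. The names `direction_runs` and `ADDRESS` are illustrative.

```python
import unicodedata

RTL_CLASSES = {"R", "AL"}        # Hebrew-type and Arabic-type letters
LTR_CLASSES = {"L", "EN", "AN"}  # Latin-type letters and digits

def direction_runs(text):
    """Split text (in logical order) into (direction, substring) runs."""
    runs = []     # each entry is [direction, characters]
    pending = ""  # neutral characters seen before any strong character
    for ch in text:
        cls = unicodedata.bidirectional(ch)
        if cls in RTL_CLASSES:
            direction = "RTL"
        elif cls in LTR_CLASSES:
            direction = "LTR"
        else:
            direction = None  # spaces, punctuation and other neutrals
        if direction is None:
            if runs:
                runs[-1][1] += ch
            else:
                pending += ch
        elif runs and runs[-1][0] == direction:
            runs[-1][1] += ch
        else:
            runs.append([direction, pending + ch])
            pending = ""
    return [(d, s) for d, s in runs]

ADDRESS = "شارع الملك فيصل 1234"
runs = direction_runs(ADDRESS)
```

For the address above this yields one RTL run for the street name and one LTR run for the number. Storing OCR output in logical order and leaving visual reordering to the display layer avoids the reversed-digit bugs that appear when an engine emits characters in the order it saw them on the page.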

Advanced Neural Architectures for Script Handling

Traditional OCR relied on basic pattern matching, which often led to poor results for complex scripts. Today’s state-of-the-art models use deep learning architectures optimized for language-specific features.

Convolutional Neural Networks (CNNs)

CNNs are responsible for feature extraction, identifying the unique characteristics of each letter, diacritic and ligature. For Arabic, they learn to distinguish between isolated, initial, medial and final forms of characters. For Cyrillic, they differentiate subtle glyph variations that often confuse OCR engines.

Recurrent Neural Networks (RNNs)

Because text is sequential, RNNs, particularly Bi-directional LSTM (Long Short-Term Memory) networks, are used to preserve the order and context of characters. This is crucial for:

Handling Arabic’s RTL flow and Cyrillic’s LTR flow.

Managing mixed-script documents where direction may switch mid-sentence.

Recognizing the flow of ligatures, which would otherwise be split apart incorrectly.

Transformers for Sequence Modeling

Transformers, a more recent innovation, excel at capturing long-term dependencies without the limitations of RNNs. They use self-attention mechanisms to focus on different parts of the text simultaneously, improving accuracy for long sentences and mixed-language scenarios.

Contextual Shaping & Ligature Detection

Arabic script is unique because its characters change shape depending on their position in a word. For example:

م (Meem) looks different in the initial position (مـ), middle (ـمـ) and final (ـم).

Ligatures like لا (Laam-Alif) are formed when certain characters join together seamlessly.

OCR engines must be trained to identify these changes dynamically. A purely character-based model would break the ligature apart, leading to errors. Instead, modern OCR systems leverage graph-based models that understand how characters link together within the word context.

Example:

In Arabic words like السلام ("Peace"), the character forms are fluidly connected. A basic OCR model might interpret them as separate entities, but an advanced model recognizes the ligature and maintains the connection throughout decoding.

For Cyrillic text, ligatures are less common, but certain fonts and handwritten styles still merge characters in a way that challenges basic OCR. Advanced models use adaptive width detection to prevent character splitting during recognition.

Multi-Headed Output Layers for Mixed Scripts

In many emerging markets, documents are not purely Arabic or Cyrillic. Passports, business cards and legal documents often contain a mix of Arabic, Cyrillic, Latin and even numerals. Traditional OCR engines would require separate models for each script, but modern architectures take a different approach.

Using multi-headed output layers, an OCR engine can recognize multiple languages simultaneously. Each head is responsible for a specific script:

Head 1 → Arabic

Head 2 → Cyrillic

Head 3 → Latin

Head 4 → Numerals

This architecture allows the OCR to seamlessly switch recognition modes based on the detected region, avoiding the need for multiple passes through the engine. It’s a major improvement for applications like passport scanning or international invoices where mixed text is common.

Synthetic Data Generation for Training

One of the biggest challenges in training OCR models for Arabic and Cyrillic is the lack of diverse, annotated datasets. To address this, developers use synthetic data generation:

Arabic and Cyrillic fonts are rendered in different styles, sizes and noise conditions.

Ligatures, diacritics and even handwriting are synthetically generated to teach models how to handle real-world diversity.

Background clutter, blur and varying light conditions are added to simulate real camera captures.

Synthetic datasets enable models to learn edge cases that are rarely covered in traditional datasets. This boosts their ability to handle noisy or low-resolution images captured by mobile devices.

Error Metrics and Model Evaluation

To measure the effectiveness of these advanced OCR models, common error metrics are used:

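Character Error Rate (CER): The character-level edit distance (substitutions, insertions and deletions) between the OCR output and the ground truth, divided by the number of ground-truth characters.

Word Error Rate (WER): The same calculation performed on whole words instead of characters.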

Ligature Error Rate (LER): Specific to scripts like Arabic, measuring how often ligatures are split or misread.

Diacritic Error Rate (DER): Critical for Arabic; measures the misplacement or omission of diacritics.

High-performance models consistently optimize these rates through iterative training and real-world testing.
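CER and WER are straightforward to compute once reference transcriptions exist. The sketch below uses a standard Levenshtein edit distance. The function names and sample strings are illustrative assumptions.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or word lists)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        cur = [i]
        for j, h in enumerate(hyp, 1):
            cur.append(min(
                prev[j] + 1,             # deletion
                cur[j - 1] + 1,          # insertion
                prev[j - 1] + (r != h),  # substitution or match
            ))
        prev = cur
    return prev[-1]

def cer(reference, hypothesis):
    """Character error rate relative to the reference length."""
    return edit_distance(reference, hypothesis) / len(reference)

def wer(reference, hypothesis):
    """Word error rate relative to the number of reference words."""
    ref_words = reference.split()
    return edit_distance(ref_words, hypothesis.split()) / len(ref_words)

REFERENCE = "программа"
OCR_OUTPUT = "погрмма"
char_error = cer(REFERENCE, OCR_OUTPUT)
word_error = wer("invoice number 12345", "invoice number 12845")
```

Because the distance is computed over code points, every dropped Arabic diacritic counts as a character error. Teams that score unvocalized text usually normalize both strings the same way before comparing them.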

Real-World Application: Arabic ID Card Scanning

Imagine you need to extract information from an Arabic ID card:

Pre-processing: The image is deskewed, noise is removed and the contrast is enhanced.

Recognition: A deep-learning-powered OCR engine identifies text, respecting RTL flow, ligatures and contextual shaping.

Multi-head output: The system detects Latin script for the ID number and Arabic script for the personal details, processing them simultaneously.

Post-processing: The text is spell-checked and formatted for structured output.

The final result is a highly accurate digital version of the ID card information, ready for database entry or further processing.

In the next section, we will explore how to optimize the recognition of ligatures and resolve glyph ambiguities that are especially challenging for Arabic and Cyrillic scripts. With the right strategies, you can significantly enhance the accuracy and reliability of OCR for emerging markets.

Ligature-Aware & Glyph-Ambiguity Resolution

When it comes to Arabic and Cyrillic text recognition, one of the major hurdles that OCR engines face is handling ligatures and resolving glyph ambiguities. These scripts are unique in how characters connect and how visually similar certain letters can be. If not handled correctly, these subtle differences can lead to significant recognition errors. In this section, we will explore the challenges ligatures and glyph ambiguities present, along with the modern techniques used to overcome them.

Understanding Ligatures in Arabic Scripts

Arabic is a cursive script, meaning most of its characters connect fluidly within words. This is particularly different from Latin scripts, where each letter is generally separate. Arabic characters change shape depending on their position in a word:

Isolated: When the character stands alone.

Initial: When it is at the beginning of a word.

Medial: When it appears in the middle of a word, connected on both sides.

Final: When it is at the end of a word, connected on the right side.

For example, the Arabic letter م (Meem) appears as:

مـ in the initial form

ـمـ in the medial form

ـم in the final form

A standard OCR engine that is not trained for Arabic will often fail to recognize these variations, seeing them as entirely different letters. Advanced OCR engines, however, are trained on large datasets with every possible form of each letter, improving their ability to match the correct character regardless of its position.

The Role of Ligatures

Ligatures are even more complex. These are special connections between two or more characters that form a single, inseparable glyph. One of the most common Arabic ligatures is لا (Laam-Alif), which joins the letters ل (Laam) and ا (Alif) into a single shape. This is not just visual styling; it is how the sequence is written in standard Arabic.
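Unicode reflects this distinction between shape and letter. Besides the base letters, it contains legacy "presentation form" code points for each positional shape and for the Laam-Alif ligature, and some OCR engines and PDF extractors emit them. A minimal sketch, using only the standard library, of folding such output back to base letters with NFKC normalization (the helper name `to_base_letters` is an assumption):

```python
import unicodedata

# Presentation-form code points for the four shapes of Meem.
MEEM_FORMS = {
    "isolated": "\ufee1",
    "final": "\ufee2",
    "initial": "\ufee3",
    "medial": "\ufee4",
}
MEEM = "\u0645"               # the base letter
LAM_ALEF_LIGATURE = "\ufefb"  # single code point for the Laam-Alif ligature
LAM, ALEF = "\u0644", "\u0627"

def to_base_letters(text: str) -> str:
    """Fold positional forms and ligatures back to base letters."""
    return unicodedata.normalize("NFKC", text)

folded_forms = {name: to_base_letters(ch) for name, ch in MEEM_FORMS.items()}
folded_ligature = to_base_letters(LAM_ALEF_LIGATURE)
```

All four shapes map to the same base Meem, and the ligature expands to Laam followed by Alif. Normalizing this way before spell-checking or search keeps downstream components from treating each shape as a different letter.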

Challenges with Ligatures:

Breaking the Connection: Simple OCR algorithms may split ligatures into separate characters, resulting in incorrect text.

Shape Variation: Ligatures may appear differently depending on the font or handwriting style, making it difficult for rigid models to identify them correctly.

Contextual Sensitivity: Some ligatures are only formed in specific word contexts, adding another layer of complexity.

Solutions for Ligature Recognition:

Modern OCR engines utilize:

Graph-based Representations: Characters are mapped as nodes and their connections (ligatures) as edges. This allows the model to recognize joint forms as a single entity.

Dilated Convolutions: These help the model understand larger receptive fields, capturing the full extent of a ligature instead of just a fragmented part.

Positional Awareness: Models are trained to understand that certain character sequences are expected to form ligatures, reducing the chance of incorrect splitting.

Resolving Glyph Ambiguities in Cyrillic Scripts

While Cyrillic does not form ligatures like Arabic, it introduces a different challenge: glyph ambiguity. Many Cyrillic letters look visually similar to Latin characters, which often leads to confusion during text recognition.

Common Glyph Ambiguities:

Cyrillic В vs Latin B

Cyrillic С vs Latin C

Cyrillic Н vs Latin H

Cyrillic У vs Latin Y

When OCR engines misinterpret these characters, it can lead to incorrect words and mistranslations. This is especially problematic for documents that mix both Latin and Cyrillic text, such as international forms or passports.

Techniques for Glyph Resolution:

Contextual Analysis: By analyzing the surrounding characters, modern OCR models can predict the most likely script. For instance, if the text is predominantly in Cyrillic, a letter that resembles "C" is more likely to be Cyrillic С.

Language Modeling: Applying a language model during post-processing helps to auto-correct ambiguities based on the expected vocabulary.

Hybrid Decoding: Models use multi-headed decoding layers to dynamically switch between character sets during processing, improving recognition accuracy.
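The contextual-analysis idea can be sketched as a per-word majority vote: if most letters in a word are Cyrillic, any Latin look-alike inside it is probably a misread Cyrillic letter, and vice versa. The look-alike table below is a small hand-picked assumption, not an exhaustive confusables list, and the function names are hypothetical.

```python
import unicodedata

# Latin letters and their visually similar Cyrillic counterparts, in the
# same order: A B C E H K M O P T X Y, then a c e o p x y.
LATIN_LOOKALIKES = "ABCEHKMOPTXYaceopxy"
CYRILLIC_TWINS = (
    "\u0410\u0412\u0421\u0415\u041d\u041a\u041c\u041e\u0420\u0422\u0425\u0423"
    "\u0430\u0441\u0435\u043e\u0440\u0445\u0443"
)
TO_CYRILLIC = str.maketrans(LATIN_LOOKALIKES, CYRILLIC_TWINS)
TO_LATIN = str.maketrans(CYRILLIC_TWINS, LATIN_LOOKALIKES)

def script_of(ch):
    """Return 'CYRILLIC', 'LATIN' or None, based on the Unicode name."""
    name = unicodedata.name(ch, "")
    if name.startswith("CYRILLIC"):
        return "CYRILLIC"
    if name.startswith("LATIN"):
        return "LATIN"
    return None

def resolve_homoglyphs(word):
    """Rewrite look-alike letters to match the word's dominant script."""
    scripts = [script_of(ch) for ch in word]
    cyrillic, latin = scripts.count("CYRILLIC"), scripts.count("LATIN")
    if cyrillic > latin:
        return word.translate(TO_CYRILLIC)
    if latin > cyrillic:
        return word.translate(TO_LATIN)
    return word  # tie: leave the decision to a language model

# "MOSKVA" in Cyrillic, except that the fifth letter was read as Latin B.
MISREAD = "\u041c\u041e\u0421\u041aB\u0410"
fixed = resolve_homoglyphs(MISREAD)
```

A vote like this cannot help with short words made up entirely of look-alikes, where both readings are valid strings. Those cases need the language-model step described in the list above.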

Feature Fusion for Handwriting and Cursive Text

Cyrillic, especially in handwritten form, can have cursive joins similar to Arabic. Characters like Т and Г may merge with neighboring letters in cursive script. Traditional OCR engines that rely purely on pixel-level analysis struggle with this.

To address this, modern models use feature fusion techniques:

Stroke Width Analysis: Identifies handwritten strokes as a series of connected lines rather than isolated pixels.

Curvature Detection: Handwritten loops and curls are detected through curvature analysis, ensuring letters are not split inaccurately.

Multi-scale CNNs: These capture fine details like loops and crossings, even when written in faint ink.

Real-World Example: Invoice Processing in Cyrillic and Arabic

Consider the scenario where you need to extract information from an international invoice containing both Arabic and Cyrillic text:

Pre-Processing: Noise is removed and contrast is enhanced.

Ligature Recognition: Arabic ligatures like لا are kept together as single units, and contextual forms like ـمـ are mapped to their base letters rather than split into fragments.

Glyph Disambiguation: Cyrillic С is identified correctly, even if it looks like Latin C.

Contextual Understanding: The model uses surrounding words to determine the correct script, minimizing errors.

The result is a clean, fully digitized invoice, ready for database entry or further processing.

Future of Ligature and Glyph-Aware OCR

The development of contextual text embeddings and script-aware transformers is pushing the boundaries of multilingual OCR. These models are designed to learn not only individual characters but also how they interact with each other contextually. This is particularly promising for mobile capture, where real-time recognition of ligatures and glyph ambiguities is critical.

With advanced techniques for ligature handling and glyph disambiguation, OCR engines are now far better equipped to process Arabic and Cyrillic scripts with high accuracy. In the next section, we will explore how language-model post-processing further enhances text recognition, ensuring that even subtle errors are caught and corrected before they reach the end user.

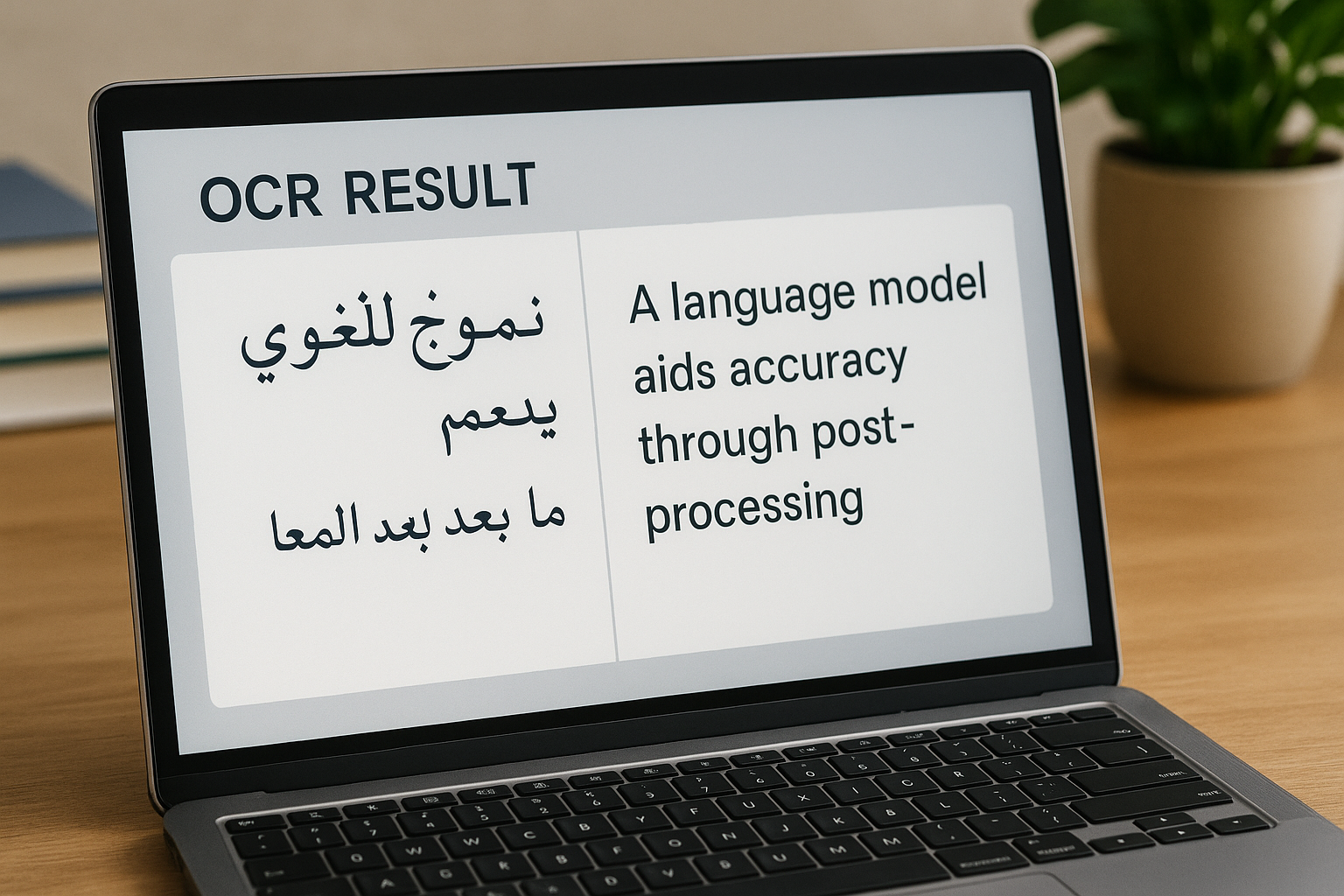

Language-Model Post-Processing for Production-Grade Accuracy

Even with advanced pre-processing, precise recognition engines and effective ligature handling, OCR outputs are rarely perfect right out of the model. Text extracted from images often contains small errors — misread characters, missed diacritics, or even incorrect word sequences. This is especially true for complex scripts like Arabic and Cyrillic, where contextual meaning can significantly change with even a single character swap.

To achieve production-grade accuracy, OCR systems increasingly rely on language-model post-processing. This step acts as a final filter, correcting mistakes, smoothing out ambiguities and ensuring the text is as close to human-readable as possible.

The Role of Language Models in OCR

A language model (LM) is essentially a tool that understands the statistical relationships between words in a specific language. When integrated into OCR pipelines, LMs help to:

Fix common recognition errors: Correct characters that were mistakenly identified during the initial OCR scan.

Handle contextual ambiguity: Select the correct word or phrase based on sentence structure.

Restore diacritics and punctuation: Arabic, in particular, depends on diacritics for meaning, which are often missed during basic OCR.

Ensure grammatical correctness: Prevents awkward or nonsensical text by leveraging real-world language patterns.

For instance, if an OCR engine reads an Arabic word as "كتب" (wrote) but the context suggests it should be "كتاب" (book), the language model can automatically correct it based on the surrounding text.

Key Techniques in Language-Model Post-Processing

1. Character-Level n-Gram Correction

In Cyrillic and Arabic OCR, character swaps or deletions are common. To combat this, LMs use n-gram analysis — a statistical method that looks at sequences of characters to predict the next one or to validate what has been read.

Example:

If the OCR output for a Russian word is "погрмма" instead of "программа" (program), the n-gram model flags sequences such as "грм" and "рмм" as highly improbable, since consonant clusters like these rarely occur in Russian. It then suggests "программа" as the closest plausible correction, boosting accuracy.

This technique is particularly useful for handling:

Transposition errors (прогармма → программа)

Spurious insertions (проограмма → программа)

Deletions (прграмма → программа)
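A toy version of this idea fits in a few lines. The sketch below collects character trigrams from a four-word lexicon, flags trigrams in the OCR output that were never seen, and proposes the lexicon entry with the highest trigram overlap (Dice coefficient). The lexicon and function names are invented for illustration. A real system would estimate n-gram probabilities from a large corpus.

```python
LEXICON = ["программа", "проблема", "погода", "грамматика"]  # toy word list

def trigrams(word):
    """Set of all three-character substrings of a word."""
    return {word[i:i + 3] for i in range(len(word) - 2)}

KNOWN_TRIGRAMS = set().union(*(trigrams(w) for w in LEXICON))

def suspicious_trigrams(word):
    """Trigrams of the word that never occur in the lexicon."""
    return sorted(trigrams(word) - KNOWN_TRIGRAMS)

def suggest(word):
    """Return the lexicon entry sharing the most trigrams with the word."""
    def dice(candidate):
        a, b = trigrams(word), trigrams(candidate)
        if not a or not b:
            return 0.0
        return 2 * len(a & b) / (len(a) + len(b))
    return max(LEXICON, key=dice)

OCR_WORD = "погрмма"
flagged = suspicious_trigrams(OCR_WORD)
correction = suggest(OCR_WORD)
```

On this toy data the unseen trigrams are exactly the two consonant clusters, and the best-overlapping entry is the first word in the lexicon. With only four words the result is close to trivial. The point is the mechanism, which scales to real vocabularies.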

2. Subword Models (BPE, SentencePiece)

Many Cyrillic and Arabic words are morphologically rich, meaning they use various prefixes, suffixes and root alterations. Traditional models that look at whole words often fail to catch these nuances.

To address this, advanced OCR systems integrate subword models like Byte Pair Encoding (BPE) and SentencePiece. These models break words into smaller, meaningful segments — subwords — that are easier to recognize and reassemble.

Example:

In Arabic: "المكتبة" (the library) → ال + مكتبة (prefix + root word)

In Cyrillic: "программирование" (programming) → программ + ирование

Subword analysis ensures that even if part of the word is misrecognized, the model can still infer the correct output based on subword frequency and structure.
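To show what segmentation looks like in practice, here is a greedy longest-match splitter over a hand-written subword vocabulary. This is only the lookup step. BPE and SentencePiece learn their vocabularies from corpus statistics, which this sketch does not attempt, and the vocabulary below is a made-up assumption.

```python
SUBWORDS = {"программ", "ирование", "ист", "а"}  # hypothetical vocabulary

def segment(word, vocab=SUBWORDS):
    """Split a word into the longest known pieces, left to right."""
    pieces, i = [], 0
    while i < len(word):
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No known piece starts here: fall back to a single character.
            pieces.append(word[i])
            i += 1
    return pieces

STEM = "программ"
programming = segment(STEM + "ирование")
programmer = segment(STEM + "ист")
```

Both words share the stem as their first piece, so evidence gathered for one word form carries over to the other. An OCR error inside the suffix still leaves the stem recognizable.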

3. RTL-Aware Spell Checkers

Spell checkers have traditionally been designed for left-to-right languages, but for Arabic and Hebrew, a simple left-to-right correction can scramble the text. To counter this, RTL-aware spell checkers are applied during post-processing.

How it Works:

The spell checker respects RTL flow, suggesting fixes in the proper direction.

Contextual awareness allows it to understand if the mistake is semantic (wrong word choice) or visual (misread character).

It handles mixed RTL/LTR text correctly, avoiding directional flips.

Example:

If the OCR reads an Arabic sentence as:

السلامه عليكم

The RTL-aware spell checker can recognize that the word should be "السلام عليكم" ("Peace be upon you"), correcting the error seamlessly.
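One way to keep a spell checker from scrambling direction is to work on the text in its stored (logical) order and to touch only the right-to-left tokens, leaving display reordering to the renderer. The sketch below assumes a tiny hand-written lexicon and uses Python's standard `unicodedata` and `difflib` modules; the lexicon, the similarity cutoff and the function names are illustrative choices, not a reference implementation.

```python
import difflib
import unicodedata

# Hypothetical lexicon; a real checker would load a full dictionary.
LEXICON = ["السلام", "عليكم", "المكتب"]

def is_rtl(token):
    # Bidi classes "R" (e.g. Hebrew) and "AL" (Arabic letters) mark RTL characters.
    return any(unicodedata.bidirectional(ch) in ("R", "AL") for ch in token)

def correct_line(line, cutoff=0.8):
    corrected = []
    # Tokens stay in logical order, so mixed RTL/LTR runs are never flipped.
    for token in line.split():
        if is_rtl(token) and token not in LEXICON:
            match = difflib.get_close_matches(token, LEXICON, n=1, cutoff=cutoff)
            if match:
                token = match[0]
        corrected.append(token)
    return " ".join(corrected)

print(correct_line("السلامه عليكم ID 12345"))
```

Latin tokens and digits pass through unchanged, which is what keeps identifiers and numbers embedded in Arabic text intact.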

4. Contextual Re-Scoring with Language Models

One of the more advanced techniques for OCR text correction is contextual re-scoring. During this process, the raw OCR output is scored against a pre-trained language model to assess how natural or logical the text is.

How it Works:

The language model evaluates each word and its neighbors.

If a word looks statistically out of place, the model suggests a correction.

For mixed-script documents, it switches language models dynamically to fit the text region (e.g., Cyrillic vs Arabic).

Example:

If the raw OCR reads:

"اهلا بكم في الموكب"

But the intended phrase was:

"أهلاً بكم في المكتب" (Welcome to the office)

The model understands "الموكب" (parade) is highly unusual in this context and re-scores it to "المكتب" (office) for semantic correctness.
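The re-scoring step can be sketched as combining the recognizer's own confidence with a language-model probability for each candidate word. Everything numeric below is invented for the example: the bigram counts, the vocabulary size and the OCR confidences are assumptions, and a real system would use a trained model rather than a two-entry table.

```python
import math

# Toy bigram statistics (hypothetical numbers, for illustration only).
BIGRAM_COUNTS = {("في", "المكتب"): 40, ("في", "الموكب"): 1}
CONTEXT_COUNTS = {"في": 200}
VOCAB_SIZE = 1000  # assumed vocabulary size for add-one smoothing

def lm_logprob(prev, word):
    # Add-one smoothed bigram probability P(word | prev).
    num = BIGRAM_COUNTS.get((prev, word), 0) + 1
    den = CONTEXT_COUNTS.get(prev, 0) + VOCAB_SIZE
    return math.log(num / den)

def rescore(prev, candidates, lm_weight=1.0):
    """candidates: list of (word, ocr_confidence) pairs for one position."""
    def combined(candidate):
        word, confidence = candidate
        return math.log(confidence) + lm_weight * lm_logprob(prev, word)
    return max(candidates, key=combined)[0]

# The recognizer slightly prefers the wrong word; context overrules it.
candidates = [("الموكب", 0.55), ("المكتب", 0.45)]
print(rescore("في", candidates))  # prints المكتب
```

The `lm_weight` parameter controls how much the language model is allowed to override the recognizer; setting it to zero falls back to the raw OCR choice.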

5. On-Device Lightweight Language Models

For mobile applications where bandwidth is limited, on-device language models perform basic error correction before sending data to the cloud for heavy processing. This setup is especially important for:

Offline processing: Allows OCR to work without internet connectivity.

Low-latency correction: Users see text corrections in real time, improving UX.

Reduced cloud costs: Less data is sent back and forth, optimizing bandwidth.

For instance, an offline mobile banking app with Arabic text scanning can locally correct diacritic errors before uploading the final version to the server.
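On-device correction does not always need a neural model. As a hedged example of the kind of lightweight pass that could run locally, the sketch below removes two common forms of diacritic noise in Arabic OCR output: a mark repeated twice in a row, and a mark that is not attached to any letter. The rule set is an assumption chosen for illustration and is far from a complete diacritic checker.

```python
# Arabic short-vowel marks and related signs, U+064B (fathatan) to U+0652 (sukun).
HARAKAT = {chr(cp) for cp in range(0x064B, 0x0653)}

def clean_diacritics(text):
    out = []
    for ch in text:
        if ch in HARAKAT:
            prev = out[-1] if out else ""
            # A mark must follow a letter or another mark (e.g. shadda + fatha).
            orphaned = not (prev.isalpha() or prev in HARAKAT)
            # The same mark twice in a row is treated as OCR noise.
            if orphaned or prev == ch:
                continue
        out.append(ch)
    return "".join(out)

noisy = "\u0643\u064e\u064e\u062a\u0628"  # kaf + fatha + fatha + ta + ba
print(clean_diacritics(noisy) == "\u0643\u064e\u062a\u0628")  # True
```

Because it is a single pass over the string with no model weights, a routine like this is cheap enough to run on every frame of a live camera preview.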

Real-World Application: Passport Scanning in Arabic and Cyrillic

Let’s imagine a scenario where an international traveler is scanning their passport for an airline's mobile app. The passport contains both Arabic and Cyrillic text alongside Latin characters.

Pre-processing: Image is deskewed, noise is removed and regions of interest are cropped.

OCR Recognition: Arabic, Cyrillic and Latin characters are recognized simultaneously using a multi-headed model.

Post-Processing:

Character-level n-gram analysis fixes minor typos.

Subword models identify roots of long Cyrillic words.

RTL-aware spell check corrects directional mistakes.

Contextual re-scoring adjusts any odd word choices.

The final output is a clean, structured text extract ready for form submission, with far fewer errors than the raw OCR output.
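Before the post-processing steps in this scenario can run, each recognized token has to be sent to the right script-specific corrector. A minimal way to do that routing, assuming tokens arrive as plain strings, is to look at the Unicode character names of the letters in each token. The function names here are hypothetical, and a real pipeline would also carry bounding boxes and confidences alongside the text.

```python
import unicodedata
from collections import Counter

def script_of(token):
    """Return the dominant script of a token, e.g. 'ARABIC', 'CYRILLIC', 'LATIN'."""
    votes = Counter()
    for ch in token:
        if ch.isalpha():
            # Unicode names start with the script, e.g. 'CYRILLIC CAPITAL LETTER I'.
            votes[unicodedata.name(ch, "UNKNOWN").split()[0]] += 1
    return votes.most_common(1)[0][0] if votes else "OTHER"

def route(tokens):
    """Group tokens by script so each group can go to its own post-processor."""
    routed = {}
    for token in tokens:
        routed.setdefault(script_of(token), []).append(token)
    return routed

print(route(["ИВАНОВ", "IVANOV", "محمد", "123456"]))
```

Tokens without letters, such as document numbers, land in the "OTHER" group and can bypass language-specific correction entirely.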

Boosting Production-Grade Accuracy with Language Models

Language models are proving to be the cornerstone for pushing OCR systems from functional to flawless. By understanding word patterns, correcting character mistakes and predicting text flow, these models turn raw OCR outputs into polished, usable data.

In the next section, we will tie all of these elements together — pre-processing, recognition, ligature handling and post-processing — to showcase how developers can build robust, multilingual OCR solutions that thrive in emerging markets.

Conclusion — Shipping Inclusive OCR Without Reinventing the Wheel

The journey of building effective OCR solutions for Arabic and Cyrillic scripts is complex but rewarding. Emerging markets in the Middle East, North Africa, Central Asia and Eastern Europe are rapidly growing their digital footprints, and businesses that cater to their linguistic needs can unlock massive new user bases. However, the path to seamless text extraction for right-to-left (RTL) and Cyrillic scripts is filled with technical challenges: bidirectionality, ligatures, diacritics and mixed-script environments.

In this final section, we’ll bring together everything we've discussed — image pre-processing, recognition engines, ligature awareness, glyph disambiguation and language-model post-processing — to highlight the key takeaways and best practices for shipping inclusive, high-accuracy OCR solutions.

Key Takeaways for Developers

Creating multilingual OCR applications that excel in Arabic and Cyrillic text recognition requires a strategic and layered approach. Here’s a recap of the critical elements:

Effective Pre-Processing Is Essential

Before an image even reaches the recognition engine, it must be optimized. Techniques like deskewing, noise removal and adaptive thresholding significantly enhance text clarity, reducing errors in the OCR stage. For Arabic, this keeps diacritics visible; for Cyrillic, it helps distinguish visually similar characters.

Context-Aware Recognition Engines

Modern OCR engines, powered by convolutional neural networks (CNNs), recurrent neural networks (RNNs) and transformers, handle complex scripts more intelligently. Bidirectional LSTMs respect the natural flow of Arabic, while multi-headed transformers recognize Cyrillic and Latin within the same document.

Ligature Handling and Glyph Disambiguation

Arabic’s fluid ligatures and Cyrillic’s lookalike glyphs require special attention. Advanced OCR models identify ligature joins and distinguish Cyrillic from Latin characters in real time, preventing common misreads.

Language-Model Post-Processing

Once text is extracted, post-processing powered by language models polishes the final output. Character-level n-grams correct typos, subword models handle morphologically rich words and RTL-aware spell checkers ensure directional integrity. This step transforms raw, sometimes flawed OCR output into production-grade text.
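Of the takeaways above, glyph disambiguation is the easiest to show in a few lines. The sketch below assumes a simple majority rule: if most letters in a token are Cyrillic, any Latin lookalikes in it are treated as misreads and mapped to their Cyrillic twins. The mapping table and the threshold are illustrative assumptions; a production system would also weigh recognizer confidence and context.

```python
# Latin letters and their visually identical Cyrillic counterparts.
LATIN_TO_CYRILLIC = str.maketrans(
    "ABCEHKMOPTXaceopxy",
    "\u0410\u0412\u0421\u0415\u041d\u041a\u041c\u041e\u0420\u0422\u0425"
    "\u0430\u0441\u0435\u043e\u0440\u0445\u0443",
)

def is_cyrillic(ch):
    # Basic Cyrillic block, U+0400 to U+04FF.
    return "\u0400" <= ch <= "\u04ff"

def fix_homoglyphs(token):
    letters = [ch for ch in token if ch.isalpha()]
    cyrillic = sum(is_cyrillic(ch) for ch in letters)
    # Only rewrite tokens that are already mostly Cyrillic.
    if letters and cyrillic * 2 > len(letters):
        return token.translate(LATIN_TO_CYRILLIC)
    return token

mixed = "M\u043e\u0441\u043a\u0432a"  # Latin "M" and "a" around Cyrillic letters
print(fix_homoglyphs(mixed) == "\u041c\u043e\u0441\u043a\u0432\u0430")  # True
```

Genuinely Latin tokens such as "Moscow" are left alone because they fail the majority test.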

Build vs Buy: Making the Right Choice

When it comes to building inclusive OCR solutions, the choice often comes down to build vs buy. Developing a custom OCR engine that handles Arabic and Cyrillic at a high level of accuracy is a significant investment. It requires:

Training datasets for different fonts, handwriting styles and noisy backgrounds.

Deep learning models fine-tuned for bidirectional text and contextual shaping.

Ongoing maintenance to improve ligature detection and multi-script switching.

For many companies, leveraging ready-made OCR APIs, like those offered by API4AI, is a smarter choice. These APIs come pre-trained on diverse datasets and are optimized for multilingual text extraction. This allows development teams to:

Accelerate time to market by integrating high-accuracy OCR in days, not months.

Reduce R&D costs tied to building custom deep learning models.

Scale effortlessly with cloud-based deployments that handle large volumes of scanned documents.

Real-World Applications That Benefit from Multilingual OCR

The use cases for Arabic and Cyrillic OCR are diverse and impactful. Here are some of the most promising applications:

Banking & Financial Services

Arabic and Cyrillic-speaking regions are experiencing rapid growth in mobile banking. OCR can streamline the onboarding process by digitizing IDs, invoices and contracts in seconds, reducing manual input errors.

Government Forms & ID Verification

Scanning passports, national IDs and tax documents in Cyrillic or Arabic is now faster and more accurate with modern OCR. This speeds up border control, government services and public administration.

E-commerce & Logistics

Arabic-language shipping labels or Cyrillic invoices are seamlessly digitized, allowing for quicker data entry, package tracking and automated order processing.

Healthcare Documentation

Medical records, prescriptions and patient forms written in Cyrillic or Arabic can be instantly converted to digital formats, improving record-keeping and patient service.

Legal & Contract Management

Digitizing Arabic or Cyrillic legal documents enables faster searching, automated compliance checks and more efficient archiving.

Next Steps for Developers and Product Teams

If you’re considering building an application that serves Arabic- or Cyrillic-speaking markets, integrating a robust OCR solution is a critical step. Here’s how to get started:

Prototype with Cloud-Based OCR APIs

Begin with a cloud-based OCR API that supports Arabic and Cyrillic. This allows you to test recognition accuracy without heavy upfront investment. API4AI’s OCR API, for instance, offers high accuracy for multilingual text extraction and is ready for real-world deployment.

Test in Real-World Conditions

Use real images — photos of passports, invoices, or shipping labels — to benchmark performance. Pay attention to diacritic handling in Arabic and letter differentiation in Cyrillic.

Refine with Custom Language Models

For mission-critical applications, consider enhancing the OCR pipeline with custom-trained language models for better post-processing. This ensures spelling, word context and grammar are polished before final submission.

Scale and Optimize

Once the prototype is successful, scale up by deploying the OCR service across your application infrastructure. Optimize for latency, especially for mobile capture, to keep the user experience seamless.
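For the benchmarking step above, a common metric is character error rate (CER): the edit distance between the OCR output and a hand-checked reference, divided by the reference length. The sketch below is one straightforward way to compute it with the standard library; normalizing both strings to NFC first is an assumption made here so that visually identical Arabic or Cyrillic text encoded in different ways does not count as an error.

```python
import unicodedata

def levenshtein(a, b):
    """Edit distance (insertions, deletions, substitutions) between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(
                prev[j] + 1,               # delete ca
                cur[j - 1] + 1,            # insert cb
                prev[j - 1] + (ca != cb),  # substitute, or match at no cost
            ))
        prev = cur
    return prev[-1]

def cer(reference, hypothesis):
    """Character error rate of an OCR hypothesis against a reference string."""
    ref = unicodedata.normalize("NFC", reference)
    hyp = unicodedata.normalize("NFC", hypothesis)
    return levenshtein(ref, hyp) / max(len(ref), 1)

print(round(cer("программа", "прграмма"), 3))  # 0.111
```

Tracking CER separately for Arabic and Cyrillic samples makes it easier to see whether diacritics or lookalike letters are the main source of errors.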

Final Thoughts: Unlocking Emerging Markets with OCR

Arabic and Cyrillic text recognition is no longer a barrier for global application development. Modern OCR technologies, combined with intelligent pre-processing and language-aware post-processing, are transforming how multilingual text is digitized and processed. For developers and businesses looking to expand into emerging markets, investing in effective OCR capabilities isn’t just a technical enhancement — it’s a strategic advantage.

By leveraging the right tools and best practices, you can create inclusive, accessible applications that serve millions of new users in rapidly growing regions. The path forward is clear and the opportunities are vast.