Contract Compliance Bot: Logo Specs vs. Reality

Introduction — Why Compliance Automation Now

Sponsorship dollars aren’t buying “exposure” in the abstract anymore; they’re buying compliance with specifics. Contracts spell out the minimum logo size (percent of frame), color accuracy (tolerance against the brand palette), on-screen duration (seconds), and sometimes placement zones and clarity. When those parameters aren’t met — or can’t be proven — you get make-goods, contested invoices, delayed cash, and eroded margins. A modern C-suite framing is simple: treat sponsor compliance like revenue assurance.

What a Contract Compliance Bot is: think of it as an automated, clause-aware audit that runs on your feeds or files before finance issues invoices. It detects logos frame-by-frame, computes size as a share of the frame, measures color drift (e.g., via perceptual differences like ΔE), accrues continuous on-screen seconds per appearance, and checks placement/clarity. Each clause gets a pass/fail, and the system assembles an evidence pack (timestamps, reference frames, logs) so legal, sales, and partners share a single source of truth. The output is not a pretty dashboard; it’s a pre-invoice gate.

Why now: three forces converged. First, measurement norms around “what counts” are maturing across media, giving executives an intuitive baseline for thresholds — e.g., widely referenced viewability rules for video use a 2-second minimum as a meaningful attention proxy. While sponsorships aren’t the same as digital ad placements, this mindset has normalized time-and-pixels thinking for verification (IAB).

Second, AI vision has become reliable enough for production auditing at broadcast scale (edge or cloud). Third, sponsors are demanding evidence-backed valuation, and industry leaders operationalize logo exposure, time on screen, and quality weighting to price and prove impact — exactly the type of proof your sales and finance teams need at close (Nielsen Sports).

Executive outcomes to expect:

Fewer disputes and make-goods: Clause-level pass/fail with attached evidence reduces back-and-forth and protects rate cards.

Faster cash: Invoices go out with proof embedded, lowering DSO and smoothing quarter-end.

Negotiation leverage: Reliable screen time, share of voice, and quality-weighted exposure bolster renewals and upsells.

Operational defensibility: An auditable trail that satisfies sponsors, agencies, and internal control owners.

How it works at a high level:

Ingest & normalize program feeds.

Detect & track brand marks with a Brand Recognition API (logo detection at frame level).

Calculate & compare size, color, duration, and placement to the contract.

Decide & package pass/fail plus evidence.

Gate finance: only compliant exposures (or approved exceptions) flow to invoicing.

This post explains how to translate legal clauses into machine-checkable rules, architect an automated audit your CFO will trust, and phase it in with minimal workflow disruption — so compliance becomes a real-time capability, not an after-the-fact scramble.

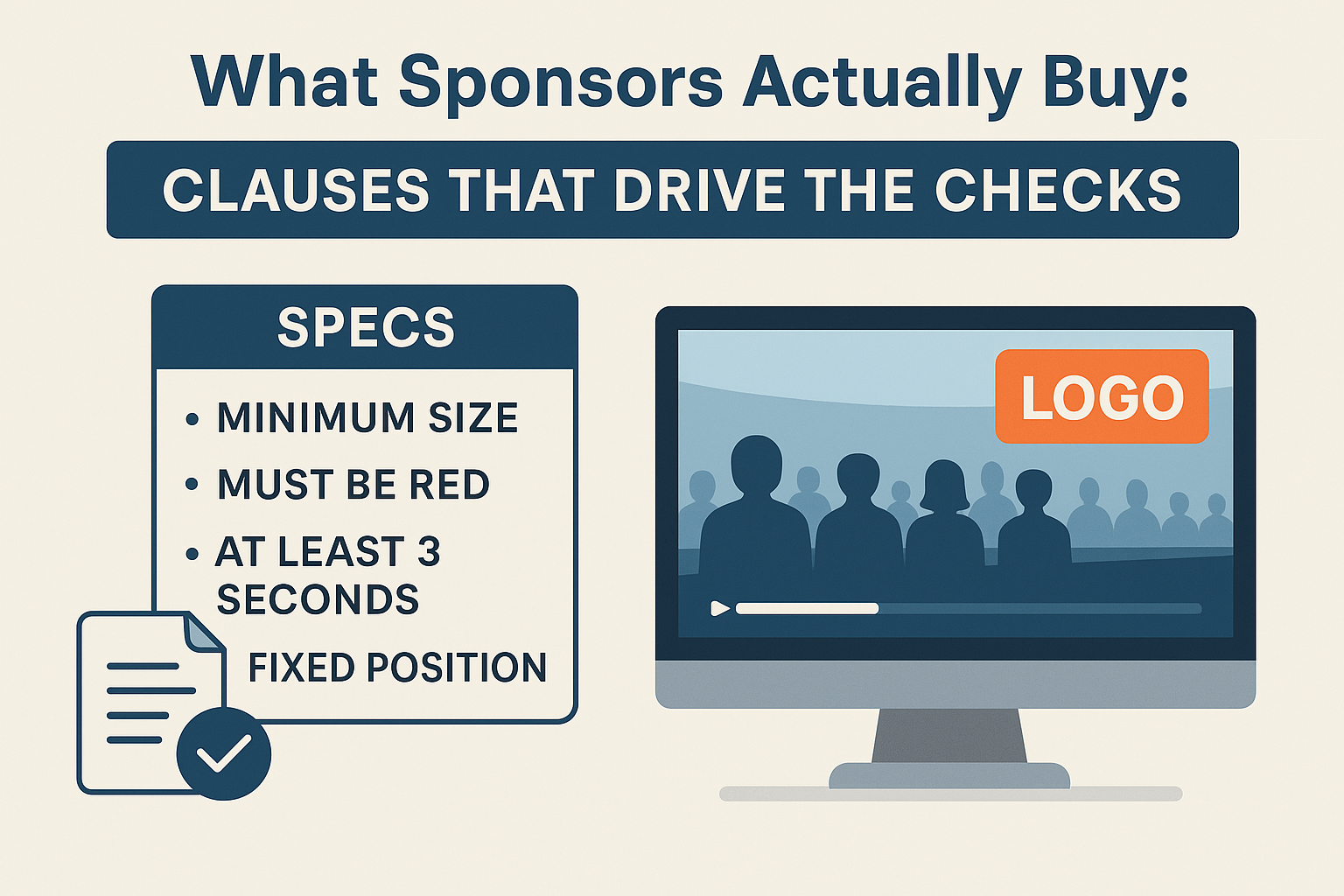

What Sponsors Actually Buy: Clauses That Drive the Checks

Sponsorship contracts don’t buy “exposure” in general; they buy specific, measurable delivery. The clause types below appear in nearly every agreement, and each can be turned into a machine check that your finance team can trust.

1) Minimum logo size (share of frame).

Contracts typically specify that a mark must occupy at least a certain percentage of the visible frame (or a minimum pixel area) to count. In practice, the compliance bot converts each detection’s bounding box into % of frame and compares it to the threshold. The same rule applies across resolutions (HD/4K) and aspect ratios, so the measurement stays consistent across feeds, replays, and social cut-downs. Edge cases worth encoding: cropped marks (only partly visible), extreme perspective (foreshortening on LED boards), and zoom jump cuts (brief spikes in size that shouldn’t inflate totals).
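As a minimal sketch of the size check, assuming the detector returns an axis-aligned box in pixel coordinates (the `BBox` shape and function names here are hypothetical, not a specific vendor's API), share of frame is just clipped box area over frame area:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BBox:
    """Axis-aligned detection box in pixels (hypothetical shape)."""
    x: float
    y: float
    width: float
    height: float


def share_of_frame(box: BBox, frame_w: int, frame_h: int) -> float:
    """Return the visible box area as a percentage of the frame area."""
    # Clip to the frame so a mark hanging off the edge is not over-counted.
    left = max(0.0, box.x)
    top = max(0.0, box.y)
    right = min(float(frame_w), box.x + box.width)
    bottom = min(float(frame_h), box.y + box.height)
    visible = max(0.0, right - left) * max(0.0, bottom - top)
    return 100.0 * visible / (frame_w * frame_h)


def meets_min_size(box: BBox, frame_w: int, frame_h: int, min_pct: float) -> bool:
    """Compare the measured share of frame with the contract threshold."""
    return share_of_frame(box, frame_w, frame_h) >= min_pct


# The same relative box yields the same percentage in HD and 4K.
hd = share_of_frame(BBox(0, 0, 192, 108), 1920, 1080)
uhd = share_of_frame(BBox(0, 0, 384, 216), 3840, 2160)
```

Because the result is a ratio, the threshold in the contract does not need to change when the feed resolution does. A bounding box overstates the area of a heavily foreshortened mark, so perspective cases would still need their own handling.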

2) Minimum on-screen duration (continuous seconds).

The requirement is almost always framed as a continuous time threshold per appearance (e.g., “≥3 seconds to count”). While sponsorship isn’t the same as digital ad viewability, the operational mindset is similar: a defined time-and-pixels threshold establishes what counts as a real opportunity to see. A common point of reference in video measurement is the industry’s “50% of pixels for 2 seconds” baseline, which has helped executives standardize duration thinking; sponsorship teams often adopt analogous thresholds to keep proofs unambiguous (IAB).
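A rough sketch of how continuous seconds could be accrued, assuming the detector emits one present/absent flag per frame at a known frame rate (the function names are illustrative):

```python
from typing import Iterable, List, Tuple


def appearances(present: Iterable[bool], fps: float) -> List[Tuple[float, float]]:
    """Group consecutive detected frames into (start_s, end_s) appearances."""
    runs: List[Tuple[float, float]] = []
    start = None   # index of the first frame in the current run
    count = 0      # total frames seen so far
    for i, flag in enumerate(present):
        count = i + 1
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            runs.append((start / fps, i / fps))
            start = None
    if start is not None:  # the run was still open at the end of the clip
        runs.append((start / fps, count / fps))
    return runs


def qualifying_seconds(present: Iterable[bool], fps: float, min_seconds: float) -> float:
    """Sum only the appearances that meet the continuous-duration threshold."""
    return sum(
        end - start
        for start, end in appearances(present, fps)
        if end - start >= min_seconds
    )


# 4.0 s on screen, a short gap, then 2.0 s on screen, at 25 fps.
flags = [True] * 100 + [False] * 10 + [True] * 50
total = qualifying_seconds(flags, fps=25.0, min_seconds=3.0)
```

With a 3-second threshold only the first appearance counts. Whether a one-frame dropout should break continuity is a contract decision; this sketch treats any gap as a break.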

3) Color fidelity (brand palette tolerance).

Brand books usually fix primary and secondary colors and define an acceptable tolerance band. The bot samples the detected region and calculates ΔE (Delta E) — a perceptual color-difference metric — against the brand palette, then flags anything outside tolerance (e.g., stadium lighting shifting a red to orange). This keeps invoices defensible even under difficult conditions like mixed lighting, HDR, or LED moiré (xrite.com).
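To make the color check concrete, here is a simplified sketch that converts 8-bit sRGB samples to CIELAB (D65 white point) and computes the CIE76 ΔE, which is plain Euclidean distance in Lab. CIE76 is used here only because it is short; a production system may prefer CIEDE2000, and the tolerance value is an assumption rather than a brand-book figure.

```python
import math
from typing import Tuple

RGB = Tuple[int, int, int]


def _srgb_to_linear(channel: int) -> float:
    """Undo the sRGB transfer curve for one 8-bit channel."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb: RGB) -> Tuple[float, float, float]:
    """Convert 8-bit sRGB to CIELAB using the D65 reference white."""
    r, g, b = (_srgb_to_linear(v) for v in rgb)
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 white point

    def f(t: float) -> float:
        return t ** (1.0 / 3.0) if t > 216 / 24389 else (24389 / 27 * t + 16) / 116

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e_cie76(sample: RGB, reference: RGB) -> float:
    """CIE76 color difference: Euclidean distance in Lab space."""
    return math.dist(srgb_to_lab(sample), srgb_to_lab(reference))


def color_within_tolerance(sample: RGB, reference: RGB, max_delta_e: float) -> bool:
    return delta_e_cie76(sample, reference) <= max_delta_e


# A brand red that has drifted to orange fails an (assumed) tolerance of 5.
drifted_ok = color_within_tolerance((255, 165, 0), (255, 0, 0), max_delta_e=5.0)
```

In practice the sample would be an average over the detected logo region rather than a single pixel, and HDR footage would need to be mapped into a known color space first.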

4) Placement zones and exclusivity.

Clauses often restrict where a logo may appear: safe zones within uniforms, boards, or overlays; no-overlap with competitors; and no obstruction by lower-thirds or crowd elements. The system checks whether detections lie inside approved polygons (e.g., LED board face, jersey chest) and whether any occlusions or competitive marks coincide. If a rule is violated, the moment is marked non-compliant and routed for review.
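The zone check can be sketched with a standard ray-casting point-in-polygon test. This assumes zones are supplied as lists of pixel vertices and detections as `(x, y, width, height)` tuples; testing only the box corners is an approximation that holds for convex zones.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def point_in_polygon(pt: Point, polygon: Sequence[Point]) -> bool:
    """Ray casting: count polygon edges crossed by a ray going right from pt."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can cross it.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def box_inside_zone(box: Tuple[float, float, float, float],
                    polygon: Sequence[Point]) -> bool:
    """True when all four box corners fall inside the approved zone."""
    x, y, w, h = box
    corners: List[Point] = [(x, y), (x + w, y), (x, y + h), (x + w, y + h)]
    return all(point_in_polygon(c, polygon) for c in corners)


# Hypothetical LED board face in a 1920x1080 frame.
led_board = [(100, 100), (500, 100), (500, 300), (100, 300)]
in_zone = box_inside_zone((150, 150, 100, 50), led_board)
out_of_zone = box_inside_zone((450, 150, 100, 50), led_board)
```

The first box sits fully on the board; the second spills past its right edge and would be marked non-compliant and routed for review.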

5) Clarity/readability (quality thresholds).

Even when size and duration pass, poor clarity (motion blur, extreme angle, low contrast, heavy occlusion) can void the impression. A lightweight quality score — derived from sharpness, contrast, and visible fraction of the logo — keeps “technical passes” from slipping into invoices when they wouldn’t survive a dispute.

6) Correct usage (brand governance).

Some agreements require the current mark only (no outdated lockups), consistent aspect ratio, and orientation. Template checks and simple geometry tests catch stretched, rotated, or monochrome versions when full-color use is mandatory. OCR can be added for wordmarks or to police disallowed categories appearing in shot (e.g., alcohol/NSFW rules in family programming).

7) Multi-feed aggregation and deduplication.

Live productions juggle many cameras. The bot must deduplicate simultaneous appearances across feeds to avoid double-counting and aggregate them into a single, auditable exposure timeline. This aligns the proof with how the audience actually experienced the program.
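One simple way to picture deduplication, assuming each feed reports a brand's appearances as `(start_s, end_s)` intervals on a shared clock, is to take the union of those intervals so overlapping seconds are counted once. The feed names below are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Interval = Tuple[float, float]


def merge_intervals(intervals: Sequence[Interval]) -> List[Interval]:
    """Merge overlapping or touching intervals into a single timeline."""
    merged: List[List[float]] = []
    for start, end in sorted(intervals):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous interval: extend it instead of adding a new one.
            merged[-1][1] = max(merged[-1][1], end)
        else:
            merged.append([start, end])
    return [(s, e) for s, e in merged]


def deduplicated_seconds(per_feed: Dict[str, Sequence[Interval]]) -> float:
    """Total exposure seconds with simultaneous appearances counted once."""
    combined = [iv for feed in per_feed.values() for iv in feed]
    return sum(end - start for start, end in merge_intervals(combined))


feeds = {
    "cam1": [(0.0, 5.0), (10.0, 12.0)],
    "cam2": [(3.0, 8.0)],  # overlaps cam1 between 3 s and 5 s
}
timeline = merge_intervals([iv for f in feeds.values() for iv in f])
seconds = deduplicated_seconds(feeds)
```

A naive sum across feeds would report 12 seconds here; the merged timeline reports 10. If the contract counts only what aired on the program feed, the same merge would be applied to the switched output instead of the raw camera feeds.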

What this gives the C-suite.

Translating clauses into explicit rules — size, seconds, color tolerance, zones, clarity — produces pass/fail evidence at the moment of delivery. Sales gets renewal leverage, finance gets pre-invoice assurance, and legal gets an audit trail that short-circuits disputes.

Where the building blocks come from.

The detection core is a logo recognition API; add OCR for disclaimers/boards, image anonymization for privacy, and optional object/scene labelling for context (e.g., “on LED board,” “on jersey”). For readers evaluating implementation paths, a modern Brand Recognition API can anchor size/duration tracking on day one, while color/placement/quality checks layer in as policy modules during rollout.

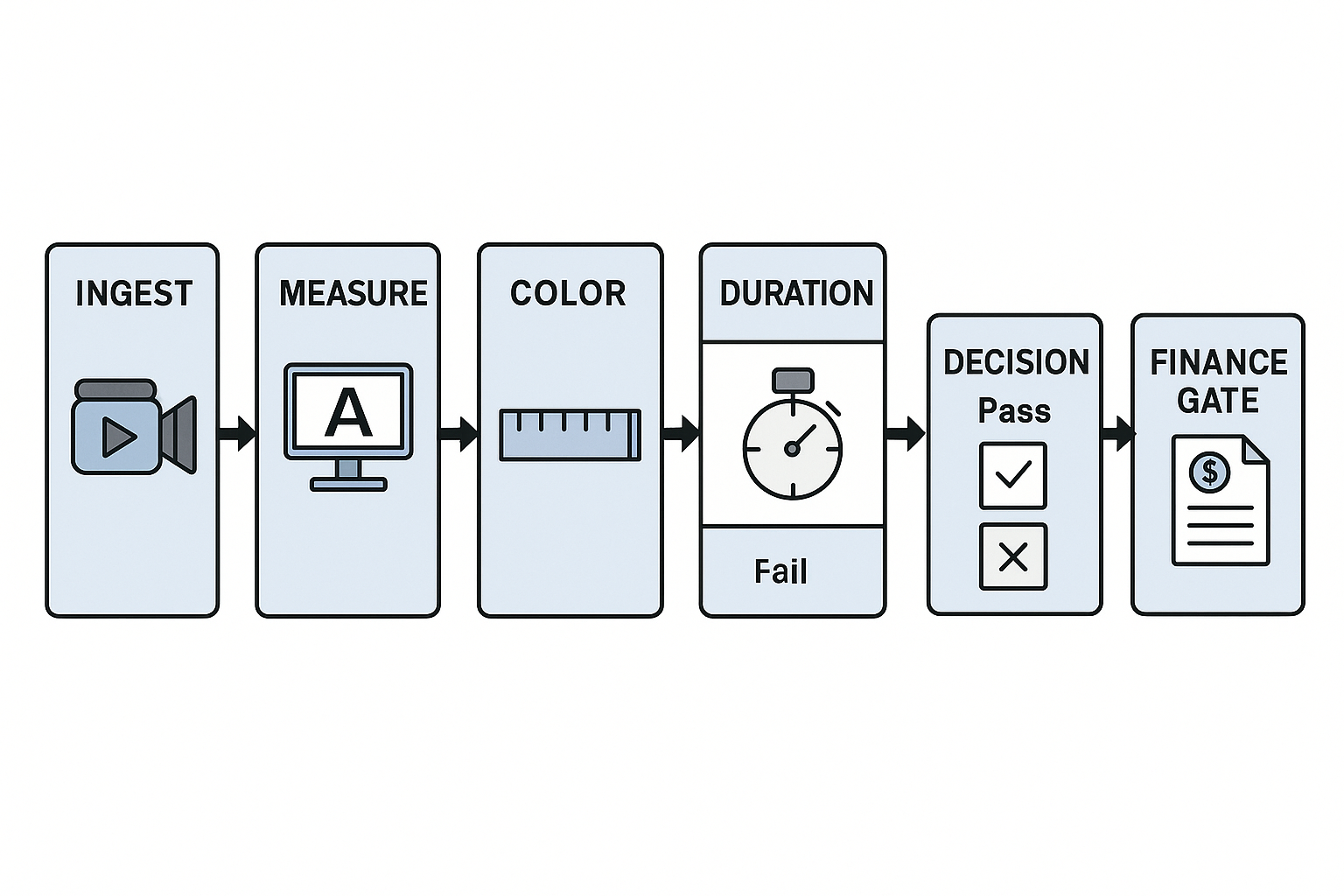

From Specs to Checks: The Compliance Bot Workflow

A Contract Compliance Bot turns contract language into a repeatable, auditable pipeline. For executives, the key is that every step produces evidence your finance, legal, and sales teams can rely on — before an invoice goes out.

1) Ingest & normalize the footage

Pull live or recorded feeds from trucks, OTT encoders, or social edits. Normalize frame rate, resolution, and color space so measurements (like “percent of frame”) are consistent across HD/4K, SDR/HDR, and different aspect ratios. Multi-camera productions are handled as a single timeline so what the audience actually saw is the source of truth.

2) Detect and track the brand marks

Use a logo-recognition block to find marks frame-by-frame, then assign them a stable identity as cameras switch and subjects move. Tracking is what prevents double counts across angles and lets you consolidate many micro-detections into a single appearance. (A ready-to-use Brand Recognition API is typically the fastest way to stand this up; it becomes the foundation for all downstream checks.)

3) Measure size and placement against the clause

Convert each detection to share of frame and compare with the minimum area required. Then confirm where the logo sits: permissible zones on LED boards, uniforms, and overlays are modeled as polygons, and simple geometry verifies that the mark stays inside the allowed region and isn’t hidden behind lower-thirds or crowd occlusions.

4) Verify color fidelity with objective tolerances

Color is tested against the brand palette using a perceptual color-difference metric (ΔE). Working in a device-independent space (e.g., CIELAB), the bot computes CIEDE2000 ΔE and flags anything outside the tolerance band — critical under mixed stadium lighting or HDR tone mapping. This anchors disputes to a standard, not opinions (cie.co.at).

5) Accrue duration and apply quality gating

Appearances are stitched into continuous seconds on screen, then filtered by a lightweight quality score (sharpness, visible fraction, contrast) so “technical passes” don’t slip through when the logo is blurred or mostly blocked. The logic mirrors the industry’s broader time-and-pixels mindset for proving an “opportunity to see,” providing executives with a familiar reference point for what counts (IAB).

6) Decide and package the evidence

A clause-aware rules layer issues pass/fail per requirement with reason codes (e.g., AreaBelowMin, ColorOutOfTolerance, DurationShort). The system assembles an evidence pack — timecodes, thumbnails or clips, and a concise log — so sponsors and agencies can verify without re-watching hours of video.
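A minimal sketch of such a rules layer is shown below. The reason codes are the ones named above; the `Clause` and `Exposure` field names and the example thresholds are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Clause:
    """Contract thresholds for one sponsor (hypothetical field names)."""
    min_area_pct: float
    min_seconds: float
    max_delta_e: float


@dataclass(frozen=True)
class Exposure:
    """Measured values for one appearance."""
    area_pct: float
    seconds: float
    delta_e: float


def evaluate(exposure: Exposure, clause: Clause) -> List[str]:
    """Return the reason codes for every failed requirement (empty = pass)."""
    reasons: List[str] = []
    if exposure.area_pct < clause.min_area_pct:
        reasons.append("AreaBelowMin")
    if exposure.delta_e > clause.max_delta_e:
        reasons.append("ColorOutOfTolerance")
    if exposure.seconds < clause.min_seconds:
        reasons.append("DurationShort")
    return reasons


clause = Clause(min_area_pct=1.0, min_seconds=3.0, max_delta_e=5.0)
passed = evaluate(Exposure(area_pct=2.4, seconds=4.2, delta_e=1.8), clause)
failed = evaluate(Exposure(area_pct=0.6, seconds=1.5, delta_e=1.8), clause)
```

Returning every failed code, rather than stopping at the first, lets the evidence pack explain the full reason an exposure was excluded.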

7) Gate the invoice and escalate edge cases

Only exposures that pass all required checks (or have an approved exception) flow into billing. Borderline cases (e.g., ΔE within a narrow band or momentary occlusion) are sent to a reviewer for a quick human decision. This keeps finance moving, reduces make-goods, and shortens DSO without slowing the production team.
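The gate itself can be sketched as a small routing function. This version assumes the only borderline case handled automatically is a ΔE reading close to the tolerance; the `review_band` width and the route names are hypothetical.

```python
from enum import Enum
from typing import Sequence


class Route(Enum):
    BILL = "bill"      # flows to invoicing
    REVIEW = "review"  # quick human decision
    HOLD = "hold"      # excluded from billing


def route_exposure(reasons: Sequence[str], delta_e: float, max_delta_e: float,
                   review_band: float = 0.5,
                   approved_exception: bool = False) -> Route:
    """Decide where one exposure goes before invoicing."""
    if approved_exception:
        return Route.BILL
    # Any failure other than color is treated as a hard fail in this sketch.
    if any(r != "ColorOutOfTolerance" for r in reasons):
        return Route.HOLD
    # Color readings within the band around the tolerance go to a reviewer.
    if abs(delta_e - max_delta_e) <= review_band:
        return Route.REVIEW
    return Route.BILL if not reasons else Route.HOLD


clean = route_exposure([], delta_e=1.0, max_delta_e=3.0)
borderline = route_exposure(["ColorOutOfTolerance"], delta_e=3.2, max_delta_e=3.0)
```

Note that a reading just inside the tolerance is also sent to review, which keeps near-misses in either direction from being decided by measurement noise alone.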

Deployment notes for leaders

Real-time vs. overnight: Real-time auditing helps producers react during the event; overnight batch is often sufficient for pre-invoice gates.

Privacy & governance: If faces or plates appear in evidence frames, an image anonymization block can mask them automatically, and retention windows align with contract terms.

Systems integration: Exports drop straight into your invoicing or rights-management system and a sponsor-friendly portal, so the same proof powers billing, renewals, and board-level reporting.

By operationalizing these steps, compliance shifts from manual spot-checks to a clause-driven, evidence-first workflow that protects revenue and speeds cash.

Controls, Governance & Risk

A compliance bot is only as credible as its controls. The goal is defensibility: when a sponsor questions an invoice, you can point to clear policies, versioned rules, and an audit trail that stands up to scrutiny.

Policy foundation (what you enforce).

Brand safety & suitability. Anchor your policies to widely recognized frameworks so language is consistent across legal, sales, and ops. The GARM Brand Safety & Suitability Framework is a useful reference point for shared definitions across categories and risk levels (wfanet.org).

Privacy-by-design. If evidence frames may contain people or license plates, run automatic anonymization (face/plate blurring) and restrict access to originals. For high-risk processing (e.g., large-scale video analysis), require a Data Protection Impact Assessment (DPIA) with documented mitigations and retention limits (ICO).

Governance model (how you enforce it).

Rule governance & versioning. Treat each contract as a set of machine rules (size, duration, color, zones). Version the rule pack with effective dates; freeze it per campaign so results remain reproducible during disputes.

Separation of duties. Keep ops (who tweak thresholds) separate from finance (who release invoices). Require dual control for rule changes mid-campaign.

RBAC & least privilege. Evidence packs (frames, timecodes, logs) are sensitive — apply role-based access controls and short-lived, expiring links when sharing externally.

Change control & sign-off. Borderline thresholds (e.g., ΔE tolerance, quality score cutoffs) move only via a ticketed approval with a business owner’s sign-off.
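Rule versioning with effective dates can be illustrated with an immutable rule pack and a lookup by event date. The `RulePack` fields and version labels below are assumptions; the point is that a frozen pack cannot be edited in place, so a mid-campaign change has to arrive as a new version.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Sequence


@dataclass(frozen=True)
class RulePack:
    """One immutable version of a contract's machine rules (hypothetical fields)."""
    version: str
    effective_from: date
    min_area_pct: float
    min_seconds: float
    max_delta_e: float


def pack_for(event_date: date, packs: Sequence[RulePack]) -> Optional[RulePack]:
    """Pick the latest pack whose effective date is on or before the event."""
    eligible = [p for p in packs if p.effective_from <= event_date]
    return max(eligible, key=lambda p: p.effective_from) if eligible else None


packs = [
    RulePack("v1", date(2024, 1, 1), min_area_pct=1.0, min_seconds=3.0, max_delta_e=5.0),
    RulePack("v2", date(2024, 6, 1), min_area_pct=1.5, min_seconds=3.0, max_delta_e=4.0),
]
spring = pack_for(date(2024, 3, 15), packs)
autumn = pack_for(date(2024, 9, 1), packs)
```

Re-running an audit for a March event always resolves to `v1`, which is what keeps results reproducible if a dispute surfaces months later.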

Evidence you can defend.

Chain of custody. Stamp each exposure with hash, model/rule versions, and time of compute. Store exact reference frames and the reason code (e.g., AreaBelowMin, DurationShort).

Retention & deletion. Define how long evidence is kept (often tied to the contract’s challenge window), then auto-purge — anonymized derivatives may be retained longer for benchmarking.

Sponsor-ready packets. Package findings into concise, shareable reports: pass/fail per clause, top exceptions, and a link to evidence. This shortens back-and-forth and speeds collections.
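The chain-of-custody stamp can be sketched with the standard library alone: hash the reference frame, record the model and rule versions, and then hash the record itself so later edits are detectable. The field names are illustrative assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, Optional


def _digest(record: Dict[str, str]) -> str:
    """SHA-256 over a canonical JSON encoding of the record."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def custody_record(frame_bytes: bytes, model_version: str, rule_version: str,
                   reason_code: str,
                   computed_at: Optional[datetime] = None) -> Dict[str, str]:
    """Build a tamper-evident stamp for one exposure's reference frame."""
    computed_at = computed_at or datetime.now(timezone.utc)
    record = {
        "frame_sha256": hashlib.sha256(frame_bytes).hexdigest(),
        "model_version": model_version,
        "rule_version": rule_version,
        "reason_code": reason_code,
        "computed_at": computed_at.isoformat(),
    }
    record["record_sha256"] = _digest(record)
    return record


def verify(record: Dict[str, str]) -> bool:
    """Recompute the record hash and compare it with the stored one."""
    body = {k: v for k, v in record.items() if k != "record_sha256"}
    return _digest(body) == record["record_sha256"]


stamp = custody_record(b"", "model-1", "rules-1", "AreaBelowMin",
                       datetime(2024, 1, 1, tzinfo=timezone.utc))
```

A plain hash shows that a record has changed but not who changed it; signing the record or writing it to append-only storage would be the next step if that matters for your audit requirements.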

Risk management (what can go wrong and how you mitigate).

False positives/negatives. Maintain a golden dataset of labeled segments; sample a fixed % of passes and fails for human review. Track precision/recall as operational KPIs.

Model drift & lighting variance. Monitor performance by venue/league; alert when quality or ΔE exceptions spike (e.g., new LED boards, HDR tone mapping).

Spec ambiguity. Convert vague clauses into explicit thresholds before go-live; if a clause remains subjective (e.g., “prominent”), add a human-in-the-loop checkpoint.

Operational continuity. If real-time checks are unavailable, fall back to batch within a defined SLA so invoices don’t slip. Keep a backfill queue and clear retry logic.
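For the false positive/negative control above, precision and recall against a golden dataset reduce to a few counts. This sketch assumes each labeled segment carries a boolean for "logo present" from both the bot and the human labeler.

```python
from typing import Sequence, Tuple


def precision_recall(predicted: Sequence[bool],
                     actual: Sequence[bool]) -> Tuple[float, float]:
    """Compare bot detections with human labels on the same segments."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must cover the same segments")
    tp = sum(1 for p, a in zip(predicted, actual) if p and a)
    fp = sum(1 for p, a in zip(predicted, actual) if p and not a)
    fn = sum(1 for p, a in zip(predicted, actual) if not p and a)
    # Guard against empty denominators when a class never occurs.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


bot = [True, True, True, False, False, True]
human = [True, True, False, False, True, True]
precision, recall = precision_recall(bot, human)
```

Here the bot has three true positives, one false positive, and one miss, so both figures come out at 0.75. Low precision inflates billable exposure; low recall leaves revenue uncounted, which is why both belong on the operational scorecard.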

Controls in the pipeline (practical blocks).

Detection/measurement: a logo recognition block provides identity, size as % of frame, and duration accrual.

Color assurance: compute perceptual color difference (e.g., ΔE) against brand palette; log tolerance breaches.

Placement & occlusion: polygonal “safe zones,” overlap checks with graphics, and visibility scoring.

Privacy & legal: image anonymization before evidence leaves the system; DPIA on file; retention timers baked into storage.

Executive upshot.

With clear policies, versioned rules, strong access controls, and audit-ready evidence, the compliance bot becomes a pre-invoice gate your CFO and counsel can trust. Disputes drop, DSO shortens, and renewals get a lift because you’re selling not just exposure, but provable delivery.

The Metrics That Matter to the C-Suite

For leaders, the value of a compliance bot is simple: less leakage, faster cash, stronger pricing power. That only happens if the system outputs a small, unambiguous scorecard your CFO, CRO, and General Counsel can all read the same way. Below are the metrics that translate technical detections into board-level impact.

Compliance & Risk (operate the gate)

Compliance Rate — share of all candidate exposures that meet every clause (size, duration, color, placement, clarity). This is your headline KPI; anything <95% signals avoidable write-offs.

Clause Pass Rates — per-rule pass/fail (e.g., “Duration met,” “ΔE within tolerance”). These isolate the why behind misses so production can fix lighting, graphics, or camera habits fast.

Exception/Dispute Rate — proportion routed to human review or escalated by sponsors. Aim to shrink this quarterly as rules stabilize and evidence quality improves.

Evidence Coverage — percent of invoice line items with attached, shareable evidence (frames, clips, logs). This is your first defense against make-goods.

Finance (protect and accelerate cash)

Make-good Avoidance ($) — the dollar value of exposures that would have been disputed but were identified and corrected pre-invoice.

Credit Notes Avoided ($) — credits you didn’t issue because clause-level proof was attached.

DSO Reduction (days) — days shaved off collections because invoices ship with evidence and fewer back-and-forths.

Write-off Rate — trend this down as the gate hardens.

Commercial Value (negotiate from strength)

Screen Time (hh:mm:ss) — continuous, deduplicated seconds per brand across the program feed.

vSOV (Visual Share of Voice, %) — a brand’s proportion of on-screen presence relative to others, combining time and visual real estate. Use this to benchmark properties and justify rate cards in renewals.

Exposure Score — a quality-weighted index that multiplies size × position weight × duration × clarity (and zeroes out any clause fails). This mirrors how leading valuation models adjust raw seconds for prominence and readability (Nielsen Sports).

CPeS (Cost per Exposure Second) — rights cost divided by compliant seconds. This becomes your apples-to-apples cost metric across events, venues, and leagues.

How to compute them (executive plain-English recipes)

Screen Time = continuous seconds where the logo is present without interruption.

vSOV = (a brand’s screen time × average share-of-frame) ÷ (sum across all brands).

Exposure Score = screen time × area factor × position factor × clarity factor; any color/placement violations set the score to zero for those moments.

Compliance Rate = compliant exposures ÷ all detected opportunities.

CPeS = rights/activation spend ÷ compliant seconds.
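The recipes above translate almost directly into code. The sketch below assumes per-brand totals are already available and that the area, position, and clarity factors are normalized weights chosen by your own valuation policy; none of the example numbers come from a real contract.

```python
from typing import Dict, Tuple


def vsov(brand_stats: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """brand_stats maps brand -> (screen seconds, average share of frame).

    Returns each brand's visual share of voice as a percentage.
    """
    weighted = {b: secs * share for b, (secs, share) in brand_stats.items()}
    total = sum(weighted.values())
    return {b: (100.0 * w / total if total else 0.0) for b, w in weighted.items()}


def exposure_score(seconds: float, area_factor: float, position_factor: float,
                   clarity_factor: float, clause_failed: bool = False) -> float:
    """Quality-weighted seconds; any clause failure zeroes the score."""
    if clause_failed:
        return 0.0
    return seconds * area_factor * position_factor * clarity_factor


def compliance_rate(compliant: int, detected: int) -> float:
    """Compliant exposures divided by all detected opportunities, as a percentage."""
    return 100.0 * compliant / detected if detected else 0.0


def cpes(spend: float, compliant_seconds: float) -> float:
    """Cost per exposure second: spend divided by compliant seconds."""
    if compliant_seconds <= 0:
        raise ValueError("no compliant seconds to divide by")
    return spend / compliant_seconds


shares = vsov({"BrandA": (60.0, 0.02), "BrandB": (30.0, 0.04)})
rate = compliance_rate(compliant=190, detected=200)
cost = cpes(spend=100_000.0, compliant_seconds=400.0)
```

In this example BrandA is on screen twice as long but at half the size, so the two brands split vSOV evenly. That is the kind of trade-off the metric is meant to surface in a renewal conversation.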

Decision triggers that change behavior

Low duration pass rate → fix replay/editorial pacing, extend stings or bumps.

Frequent color fails (ΔE out of tolerance) → adjust LED and HDR tone-mapping, revisit palette for night games.

Below-goal vSOV → proactively schedule make-goods or re-balance camera plans before the next fixture.

High dispute rate → tighten evidence packs and clarify rule language with sponsors.

Why these benchmarks “click” in the boardroom

Executives already accept time-and-pixels thresholds in digital video (e.g., the widely referenced 50% of pixels for at least two seconds viewability standard). Sponsorship verification isn’t identical, but aligning your definitions to these familiar thresholds makes proofs intuitive and faster to approve (IAB).

Where the numbers come from

A modern logo recognition block provides identities, bounding boxes, and continuity over time; from there, it’s straightforward to calculate screen time, % of frame, vSOV, and quality-weighted Exposure Scores. Connectors then push the pass/fail plus evidence directly into invoicing and sponsor-facing reports. If you need to stand this up quickly, a ready-to-use Brand Recognition API can anchor the measurements while color tolerance, zone rules, and anonymization are layered on as policy modules.

Bottom line: when these KPIs are standardized and automated, compliance becomes a pre-invoice checklist, not a post-mortem. You’ll issue fewer credits, collect faster, and walk into renewals with numbers that hold up in the room.

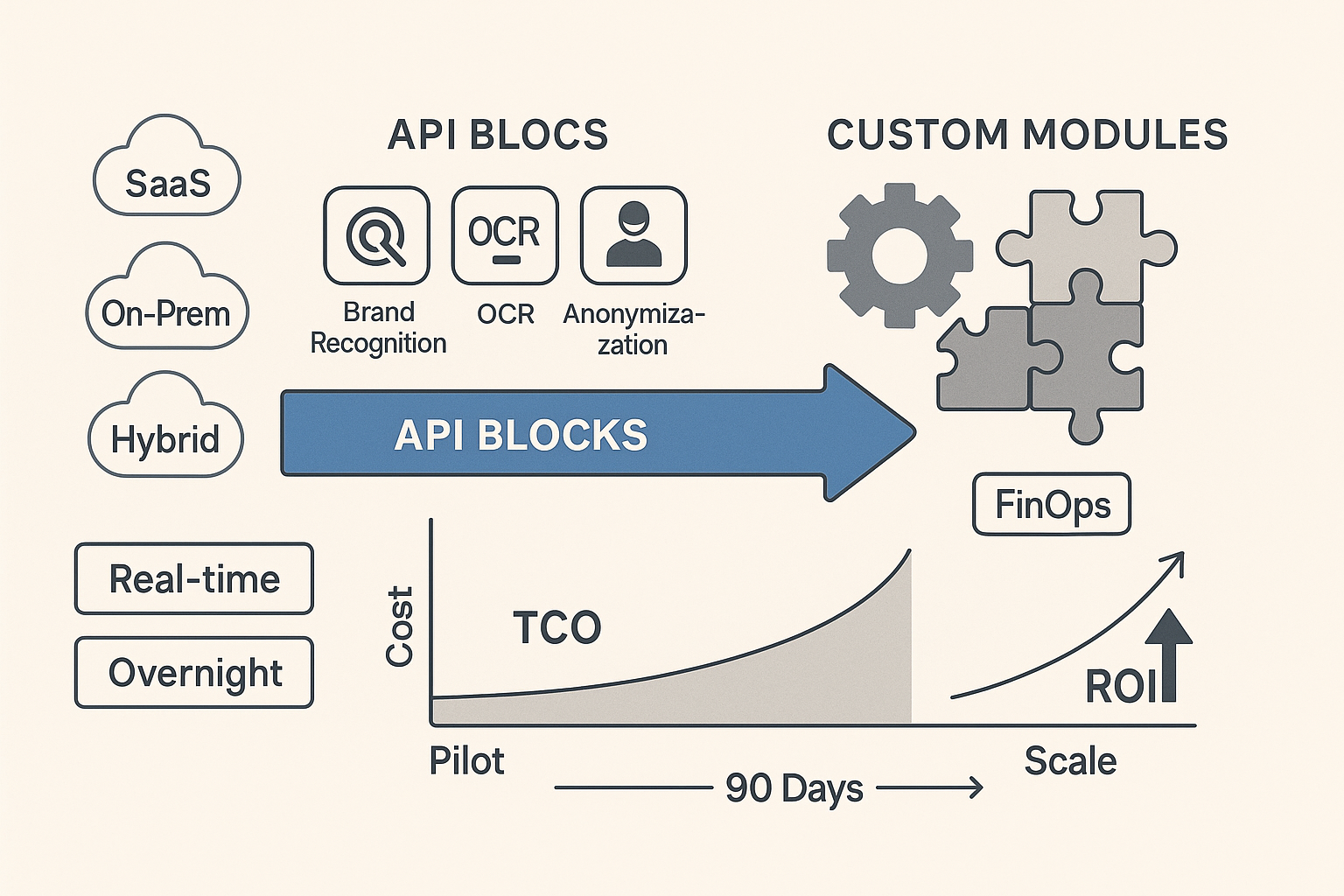

Build Path & Economics — APIs First, Custom Where It Pays Off

C-level framing: the fastest way to get to proof-ready invoices is an API-first build (to stand up core detection and measurement quickly) with targeted customization where your league rules, venues, or workflows are unique. That combination compresses time to value, keeps total cost of ownership (TCO) under control, and leaves room for differentiation.

1) Deployment choices that actually matter

SaaS vs. On-Prem vs. Hybrid.

SaaS accelerates pilots and reduces undifferentiated heavy lifting (infrastructure, upgrades).

On-prem or private cloud is justified for strict data-custody clauses or air-gapped trucks.

Hybrid is often the sweet spot: run detection close to cameras (truck/edge) and push only evidence packs (frames, timecodes, logs) to the cloud for storage, reporting, and billing.

Real-time vs. overnight.

Real-time helps production teams react during the event (e.g., adjust camera plans to hit duration/size thresholds).

Overnight is typically enough for the pre-invoice gate, lowering compute costs while delivering finance the next-day proof.

2) Start with building blocks, then specialize

API building blocks (illustrative):

Brand Recognition API — core logo detection/tracking to derive screen time and share of frame.

Object Detection / Image Labelling APIs — context filters (e.g., “on LED board,” “on jersey”), placement zones, and exclusivity checks.

OCR API — reads scoreboard/overlay text and disclaimer timing to align exposures with moments that matter.

Image Anonymization & Face Detection APIs — privacy-by-design for evidence frames.

NSFW / Alcohol Recognition APIs — suitability screens where contracts restrict content adjacency.

Custom extensions (where it pays off):

League- or venue-specific zone maps and occlusion heuristics (e.g., particular lower-third graphics).

Color fidelity rules using ΔE tolerances that reflect brand palettes under your lighting/HDR pipeline.

A contract-aware rule engine that mirrors your clause language (pass/fail codes, exception routing, retention windows).

Systems integration with rights management and billing so evidence flows straight to invoices.

3) Economics: make TCO predictable

Speed to green: API-first shortens the runway from months to weeks for an audit-ready pilot. You avoid the fixed cost of training and maintaining custom detectors across thousands of marks and venue conditions.

Variable vs. fixed cost: Push bursty compute (event days) to consumption-based cloud where possible; keep steady workloads in reserved capacity.

Cost transparency: Adopt FinOps practices — unified tagging, allocation, anomaly detection — so engineering, finance, and production share a single language for unit costs (e.g., per hour of footage audited, per compliant exposure second) (finops.org).

Avoid re-inventing plumbing: Identity, auth, logging, monitoring, model deployment, and GPU fleet management rarely differentiate your product; buy or reuse.

4) Risk controls baked into the design

Data custody: Evidence frames can be anonymized at the edge; originals never leave the restricted environment unless the contract allows.

Operational resilience: If live checks degrade, the pipeline falls back to batch processing within a defined SLA, so the invoice gate still catches non-compliance before billing.

Model drift watch: Venue changes (new LEDs, HDR) can shift color/clarity — monitor exception rates and auto-flag spikes for review.

5) A pragmatic adoption plan (90 days)

Weeks 1–2: Pilot setup. Connect one event property; wire Brand Recognition API for detection; output size (% of frame) and seconds on screen.

Weeks 3–6: Policy layers. Add color (ΔE), placement zones, clarity score; define pass/fail codes to mirror contract language; integrate anonymization for evidence.

Weeks 7–10: Finance integration. Export pass/fail and evidence packs to your billing or revenue-assurance system; run shadow audits against one cycle.

Weeks 11–12: Rollout decision. Review Compliance Rate, DSO movement, and credit notes avoided; approve expansion to more venues and sponsors.

6) Capability maturity: plan the climb, not just the launch

Use a maturity model to phase investment — start with detection and clause checks, then harden governance, SLOs, and cost controls. The CNCF Cloud-Native Maturity Model is a helpful, vendor-neutral way to align architecture, process, and business outcomes as you scale from pilot to standard practice (maturitymodel.cncf.io).

Executive takeaway

Lead with APIs for speed, invest custom where contracts and venues demand it, and run the program with FinOps discipline so costs track revenue. That path turns compliance from a manual cost center into a scalable, provable capability that protects margin and strengthens renewal pricing.

Conclusion — Make Compliance a Real-Time Capability

If sponsors pay for size, color, duration, and placement, then the organization must pay equal attention to proof. The fastest path is to run compliance as a pre-invoice gate (automated, clause-aware, and backed by evidence) so revenue is protected before it ever reaches Accounts Receivable.

What changes when you do this well

Disputes collapse to exceptions. You stop debating subjective “exposure” and start sharing pass/fail with evidence (timecodes, frames, reason codes). Finance issues fewer credits, legal spends less time on make-goods, and production receives precise feedback loops.

Cash accelerates. Invoices ship with proof; DSO shrinks because sponsors have less to question.

Pricing power improves. Standardized screen time, vSOV, and quality-weighted exposure turn renewals into data-driven negotiations — not opinion contests.

Risk is governed, not assumed. Versioned rules, privacy controls, and auditable logs make compliance defensible with partners and auditors.

A pragmatic next step for leaders (single-property pilot)

Pick one property + one sponsor. Translate the contract into explicit thresholds: minimum % of frame, continuous seconds, ΔE color tolerance, approved zones, and minimum clarity. Anchor the “opportunity-to-see” mindset to familiar viewability definitions to keep stakeholders aligned.

Stand up core blocks quickly. Use a Brand Recognition API to measure size and duration; add OCR (for overlays/boards) and image anonymization (privacy) to produce shareable evidence packs.

Wire the finance gate. Only compliant exposures (or approved exceptions) flow to billing.

Measure three outcomes. Compliance Rate, credit notes avoided ($), and DSO movement. If these move in the right direction on a single cycle, you have a board-ready case to expand.

Build with speed, scale with discipline

APIs first, custom where it pays off. Ready-to-use APIs shorten time-to-value; tailor color/zone/clarity policies and league-specific rules as you scale.

Cost visibility from day one. Treat the pipeline like any cloud workload: adopt FinOps practices (unit economics, allocation, anomaly detection) so engineering, finance, and production share a single language for cost vs. value.

The leadership call

Move compliance upstream. Make it continuous during events (for production) and definitive before invoicing (for finance). With a clause-aware bot at the center — and a modular stack that includes logo detection, OCR, anonymization, and a contract-aware rule layer — you turn sponsorship from “we think it ran” into “here is what ran, proven.” That shift doesn’t just protect margin; it wins renewals and sets a higher standard your competitors will struggle to match.