Green Manufacturing Scores via Defect-Free Vision

Introduction – Why Zero-Defect Lines Are the New ESG Superpower

Manufacturing has always aimed for quality, but today, the stakes are higher than ever. In a world where sustainability goals and environmental reporting are just as important as profit margins, every defective product carries more than just a cost — it carries a carbon footprint. For companies focused on ESG (Environmental, Social, and Governance) performance, avoiding defects means more than reducing rework; it means cutting waste, saving energy, and protecting brand credibility.

Traditionally, production flaws were seen as unavoidable — just part of the process. But with growing pressure to reduce CO₂ emissions and material usage, that mindset is shifting. Modern manufacturing leaders are realizing that every scrap part, every rejected batch, and every late-stage fix burns more electricity, consumes more raw materials, and pushes sustainability targets further out of reach. In this new landscape, defect prevention isn’t just an efficiency play — it’s a climate strategy.

Here’s where computer vision enters the scene. With today’s powerful AI models, even subtle visual defects can be detected automatically, in real time, as products move down the line. Combined with affordable high-speed cameras and cloud-based APIs, visual inspection systems can now catch issues earlier than ever — before flawed items waste more resources downstream.

This isn’t science fiction. Thanks to advancements in cloud image processing, even mid-sized factories can deploy AI-powered inspection without heavy upfront investments. In many cases, existing cameras and systems can be upgraded with API-based intelligence, allowing teams to identify surface cracks, misalignments, contamination, or missing components instantly — long before they leave the production floor.

In this blog post, we’ll explore how defect detection powered by vision AI can help factories improve their sustainability metrics while also cutting operational costs. You’ll learn how these systems work, how they’re deployed, and why reducing defects is one of the smartest and greenest moves any manufacturer can make right now.

The Hidden Carbon Cost of Scrap & Rework

When we think about manufacturing waste, we often imagine piles of discarded parts or materials. But what’s harder to see — yet far more damaging over time — is the invisible energy and carbon tied to every flawed product that rolls off the line. Each defective unit isn’t just a lost sale or a delay; it’s a drain on your factory’s sustainability performance.

Let’s break it down.

Energy Gets Burned Twice

Producing a defective part uses the same electricity, heat, and machine time as a good one. If that part is scrapped, you spend all of that energy again to make a replacement, roughly doubling the consumption (and the emissions) behind a single good product. Rework costs less than a full remake, but it still adds energy on top of the original run. In high-speed lines where defects go undetected for hours, this adds up fast.

For example, forging a small metal component may take only seconds, but the process involves high-temperature furnaces, powerful presses, and cooling systems — all of which draw significant power. If the part is found to be flawed after finishing, you’ve spent all that energy for nothing, and you’ll spend even more to make it again.

Raw Materials Are Lost

Every flawed product also means wasted materials: steel, aluminum, plastics, coatings, and adhesives that can't be recovered easily. Worse, some materials (especially composites and coated or treated parts) are difficult or impossible to recycle and end up in landfills. Even when recycling is possible, the remelting or grinding process requires more energy and introduces additional emissions.

And if your production involves rare or regulated materials, like specialty alloys or chemicals, the environmental footprint of scrap becomes even heavier due to the complexity and distance of their supply chains.

Upstream & Downstream Waste

The carbon impact doesn’t stop at your factory walls. A defective item inflates Scope 3 emissions, the indirect emissions across your value chain, from suppliers upstream to distributors downstream. For instance:

Suppliers used electricity and fuel to mine, refine, and ship raw materials that were eventually scrapped.

Transport fleets might carry faulty batches to warehouses or customers, only to return them later, increasing fuel use and emissions.

This ripple effect makes defect rates a key metric not just for operations, but for ESG audits and sustainability reports.

It Adds Up Quickly

Even a small defect rate can translate into large losses. A factory producing 1 million units per year with a 3% defect rate wastes 30,000 units annually. Multiply that by energy costs, material inputs, and rework hours, and you’re looking at a major drain — both environmentally and financially.
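The arithmetic behind that figure is easy to reproduce and extend to the inputs each defective unit carries. Below is a minimal Python sketch; it assumes every defective unit is scrapped rather than reworked, and the per-unit energy and material figures are hypothetical placeholders, not data from any real plant.

```python
def annual_scrap_footprint(units_per_year: int, defect_rate: float,
                           kwh_per_unit: float, kg_material_per_unit: float) -> dict:
    """Estimate what defective output consumes in a year.

    Assumes every defective unit is scrapped (no rework), so each one
    carries the full per-unit energy and material input.
    """
    if not 0.0 <= defect_rate < 1.0:
        raise ValueError("defect_rate must be in [0, 1)")
    defective_units = round(units_per_year * defect_rate)
    return {
        "defective_units": defective_units,
        "wasted_kwh": defective_units * kwh_per_unit,
        "wasted_material_kg": defective_units * kg_material_per_unit,
    }


# Volume and defect rate from the text; 2 kWh and 0.5 kg per unit are hypothetical.
footprint = annual_scrap_footprint(1_000_000, 0.03, kwh_per_unit=2.0, kg_material_per_unit=0.5)
print(footprint)  # {'defective_units': 30000, 'wasted_kwh': 60000.0, 'wasted_material_kg': 15000.0}
```

Swapping in your own metered energy and bill-of-materials figures turns the same function into a first estimate for a sustainability report.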

In sectors like automotive, aerospace, or electronics — where precision is everything — the carbon cost of just one bad batch can be huge. And in a time when carbon disclosures are becoming mandatory in many regions, every scrap bin becomes a liability.

By targeting defects early with smart, automated inspection systems, manufacturers can prevent this waste before it happens. Not only does that make the line more efficient, it helps reduce emissions and resource use in a measurable, reportable way — a win for both the bottom line and the climate.

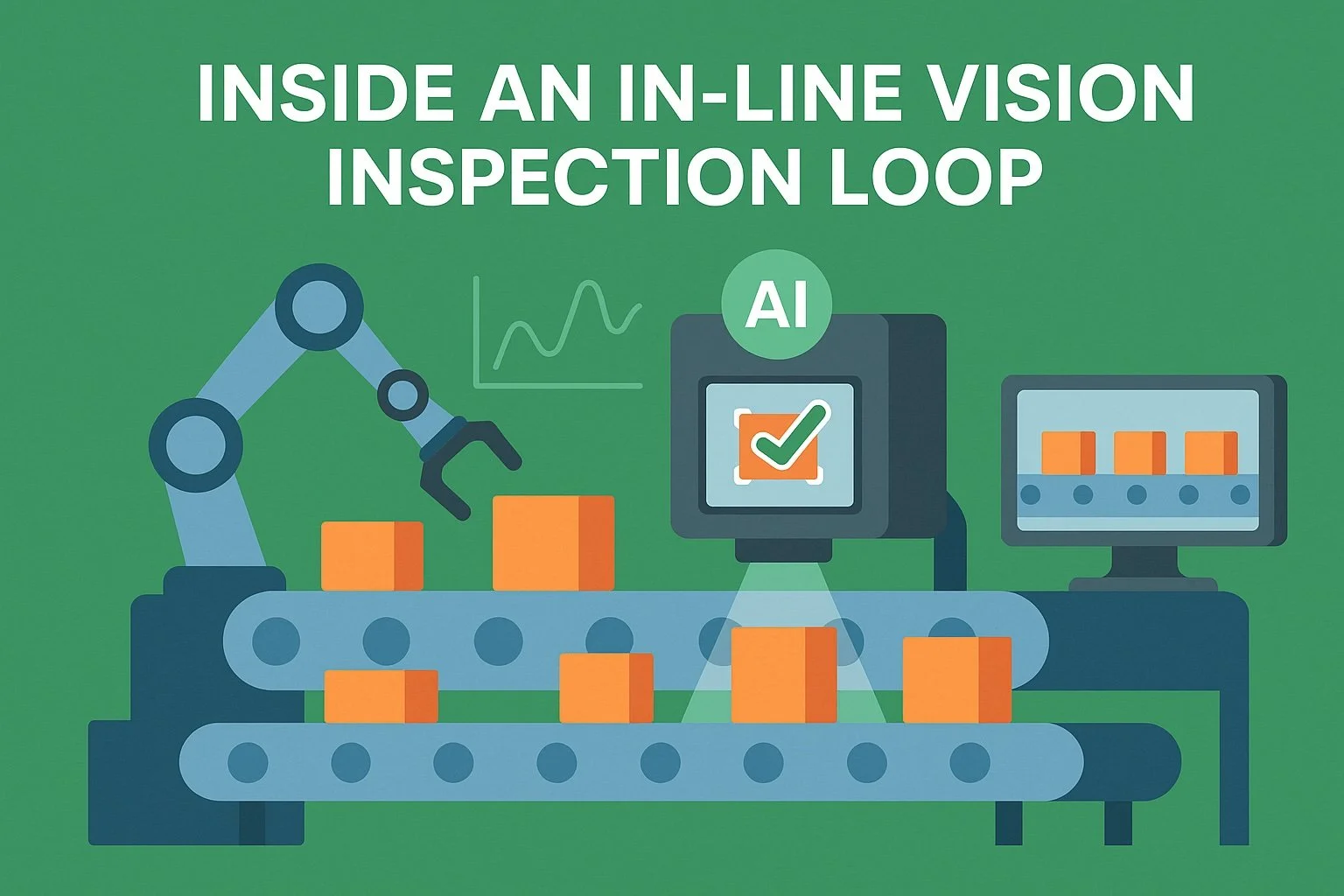

Inside an In-Line Vision Inspection Loop

To cut down on defects and reduce environmental impact, manufacturers are turning to in-line vision inspection systems — automated setups that watch every product as it moves through the production line. These systems use cameras and AI to detect problems the moment they appear, allowing teams to react fast, reduce waste, and keep quality high.

Let’s look at how it works step by step.

Camera Checkpoints Along the Line

An effective vision inspection system doesn’t rely on just one camera at the end of the line. Instead, cameras are placed at multiple points during production to catch issues as early as possible. For example:

Entry Checkpoint: Verifies raw materials or partially assembled components are correct before they enter the main process.

Mid-Process Checkpoint: Catches defects during key assembly or transformation steps, like welding, coating, or molding.

Final Checkpoint: Performs a full scan before packaging to make sure the product is clean, complete, and defect-free.

Each checkpoint provides a real-time “snapshot” of product quality at that stage, minimizing how far flawed parts travel before being caught.
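To see why earlier checkpoints matter, it helps to count how much processing a flawed part has already absorbed when each checkpoint rejects it. The sketch below uses three hypothetical process stages with made-up energy figures; the structure of the calculation, not the numbers, is the point.

```python
# Hypothetical line: energy (kWh) each part absorbs in successive process stages.
STAGES = [("forming", 1.5), ("welding", 0.9), ("coating and curing", 0.8)]

# Checkpoints from the text, keyed by how many stages a part has completed when inspected.
CHECKPOINTS = {"entry": 0, "mid-process": 2, "final": 3}


def sunk_kwh(stages_completed: int) -> float:
    """Energy already invested in a part when a checkpoint rejects it."""
    return sum(kwh for _, kwh in STAGES[:stages_completed])


for name, done in CHECKPOINTS.items():
    print(f"rejected at {name} checkpoint: {sunk_kwh(done):.1f} kWh already spent")
```

With these placeholder values, a flaw caught at the entry checkpoint costs nothing in process energy, while the same flaw caught at the final checkpoint has already absorbed every stage.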

The Vision-AI Workflow

Here’s what happens behind the scenes during inspection:

Image Capture – High-resolution cameras take pictures or video of the moving product, often under controlled lighting.

Preprocessing – Basic image adjustments like noise reduction, contrast enhancement, or edge sharpening are applied (often at the edge).

AI Inference – The image is sent to a trained AI model — usually running in the cloud or at the edge — that looks for flaws like cracks, smudges, missing parts, or misalignments.

Feedback Loop – If a defect is found, the system can trigger actions instantly: alerting operators, stopping the line, or sending the item to a rework station.
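The four steps map naturally onto a small processing function. The sketch below is illustrative only: `run_model` is a hypothetical stand-in for whatever cloud or edge model you call, images are plain lists of grayscale pixel values so the example needs no imaging library, and the 0.5 threshold is an arbitrary placeholder.

```python
from typing import Callable, List

Image = List[List[int]]  # grayscale pixels, 0-255


def stretch_contrast(img: Image) -> Image:
    """Preprocessing: linearly rescale pixel values to use the full 0-255 range."""
    lo = min(min(row) for row in img)
    hi = max(max(row) for row in img)
    if hi == lo:  # flat image: nothing to stretch
        return [row[:] for row in img]
    return [[(p - lo) * 255 // (hi - lo) for p in row] for row in img]


def inspect(img: Image, run_model: Callable[[Image], float], threshold: float = 0.5) -> str:
    """Capture -> preprocess -> inference -> feedback, returning the action to take."""
    prepared = stretch_contrast(img)
    defect_score = run_model(prepared)  # hypothetical: higher means more likely defective
    if defect_score >= threshold:
        return "divert_to_rework"  # feedback loop: act on the line immediately
    return "pass"


def toy_model(img: Image) -> float:
    """Toy stand-in model: fraction of very dark pixels (e.g. cracks or smudges)."""
    dark = sum(p < 30 for row in img for p in row)
    return dark / sum(len(row) for row in img)


print(inspect([[100, 120], [110, 60]], toy_model))  # pass
print(inspect([[10, 20], [25, 200]], toy_model))    # divert_to_rework
```

In a real deployment the toy model would be replaced by an API call or a local model, but the shape stays the same: one function in, one action out, which keeps the line-side logic easy to test.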

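The entire process, from image to decision, can often finish in under 500 milliseconds, depending on image size, network round trip, and model complexity. That is fast enough for many high-speed conveyor lines, provided the decision arrives before the part reaches the reject point.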
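Whether a given latency is fast enough depends on line throughput. A quick check, assuming a hypothetical line speed: if several inspections can be in flight at once, Little's law (items in the system = arrival rate × time each spends inside) gives the concurrency you need.

```python
import math


def time_per_part_ms(parts_per_minute: float) -> float:
    """Interval between consecutive parts arriving at the camera."""
    return 60_000 / parts_per_minute


def requests_in_flight(parts_per_minute: float, latency_ms: float) -> int:
    """Minimum number of concurrent inspections so the camera never has to wait."""
    return math.ceil(latency_ms / time_per_part_ms(parts_per_minute))


# Hypothetical line running 300 parts/minute with a 450 ms image-to-decision latency.
print(time_per_part_ms(300))         # 200.0
print(requests_in_flight(300, 450))  # 3
```

Throughput is only half the constraint: the latency also has to be shorter than the time a part takes to travel from the camera to the diverter or reject gate.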

Different Models for Different Defects

Vision AI isn’t one-size-fits-all. Depending on what needs checking, different model types are used:

Image Classification Models: Decide if an item is “OK” or “Defective” based on its overall look. Great for obvious flaws or binary checks.

Object Detection Models: Locate specific issues within the image — like a missing screw, a misaligned panel, or a wrong part.

Segmentation Models: Highlight exactly where a surface defect (like a scratch or dent) is located, down to the pixel.

OCR (Optical Character Recognition): Reads printed or laser-etched codes to verify batch numbers, expiration dates, or serials.
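Each model type returns a different kind of answer, and the quality-control logic has to turn each into a pass/fail call. The dataclasses below are a hypothetical, simplified shape for those outputs (real APIs, including API4AI's, define their own response formats), and the tolerance values are placeholders; the sketch only makes the differences concrete.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Classification:
    label: str  # e.g. "ok" or "defective"
    confidence: float


@dataclass
class Detection:
    label: str  # component name, e.g. "screw" or "panel"
    confidence: float
    box: Tuple[int, int, int, int]  # x, y, width, height in pixels


@dataclass
class Segmentation:
    defect_pixels: int  # pixels the mask marks as scratch or dent
    total_pixels: int


def classification_fails(c: Classification, min_conf: float = 0.7) -> bool:
    return c.label == "defective" and c.confidence >= min_conf


def detection_fails(dets: List[Detection], required: Set[str], min_conf: float = 0.5) -> bool:
    """Fail if any required component was not detected with enough confidence."""
    found = {d.label for d in dets if d.confidence >= min_conf}
    return not required <= found


def segmentation_fails(s: Segmentation, max_defect_fraction: float = 0.001) -> bool:
    """Fail if the defective area exceeds a tolerance, as a fraction of the image."""
    return s.defect_pixels / s.total_pixels > max_defect_fraction


def ocr_fails(read_text: str, expected_code: str) -> bool:
    """Fail if the printed batch number or serial does not match the production record."""
    return read_text.strip() != expected_code


dets = [Detection("screw", 0.91, (10, 10, 8, 8)), Detection("panel", 0.88, (0, 0, 200, 120))]
print(detection_fails(dets, required={"screw", "panel", "gasket"}))  # True: no gasket found
```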

Cloud-based APIs from platforms like API4AI offer many of these models as ready-to-use endpoints. This means factories can start detecting flaws without building complex AI infrastructure from scratch.

Speed and Precision Matter

To be effective, the inspection loop must be fast, accurate, and easy to integrate. Downtime is expensive, and every second of delay lets flawed parts travel further before they are caught. That’s why many systems aim for low latency, processing each image and delivering a decision in less than half a second.

Precision is also key. False positives (calling a good product bad) cause unnecessary rework, while false negatives (missing a real defect) allow flawed items to slip through. Well-trained AI models, especially those fine-tuned on a company’s real product images, can strike the right balance.
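In practice, the balance between the two error types is usually set by a confidence threshold. The sketch below sweeps a few thresholds over a small, made-up set of scored images (1 = truly defective) and counts false positives and false negatives at each; with real data, you would run the same sweep on a labeled validation set from your own line.

```python
from typing import List, Tuple

# (model defect score, ground truth: 1 = defective, 0 = good). Made-up values.
SAMPLES: List[Tuple[float, int]] = [
    (0.95, 1), (0.80, 1), (0.62, 1), (0.40, 1),
    (0.70, 0), (0.35, 0), (0.20, 0), (0.10, 0), (0.05, 0),
]


def error_counts(threshold: float) -> Tuple[int, int]:
    """Return (false positives, false negatives) when flagging score >= threshold."""
    fp = sum(1 for score, truth in SAMPLES if score >= threshold and truth == 0)
    fn = sum(1 for score, truth in SAMPLES if score < threshold and truth == 1)
    return fp, fn


for t in (0.3, 0.5, 0.75):
    fp, fn = error_counts(t)
    print(f"threshold {t:.2f}: {fp} false positives (rework), {fn} false negatives (escapes)")
```

Lowering the threshold trades escapes for unnecessary rework and vice versa; where to sit on that curve depends on what an escaped defect costs compared with a wrongly rejected good part.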

By combining smart camera placement, fast cloud APIs, and AI-powered defect recognition, in-line vision inspection loops create a proactive quality control system. Instead of reacting to bad outcomes, manufacturers can prevent them entirely — saving resources, reducing emissions, and keeping production lines lean and sustainable.

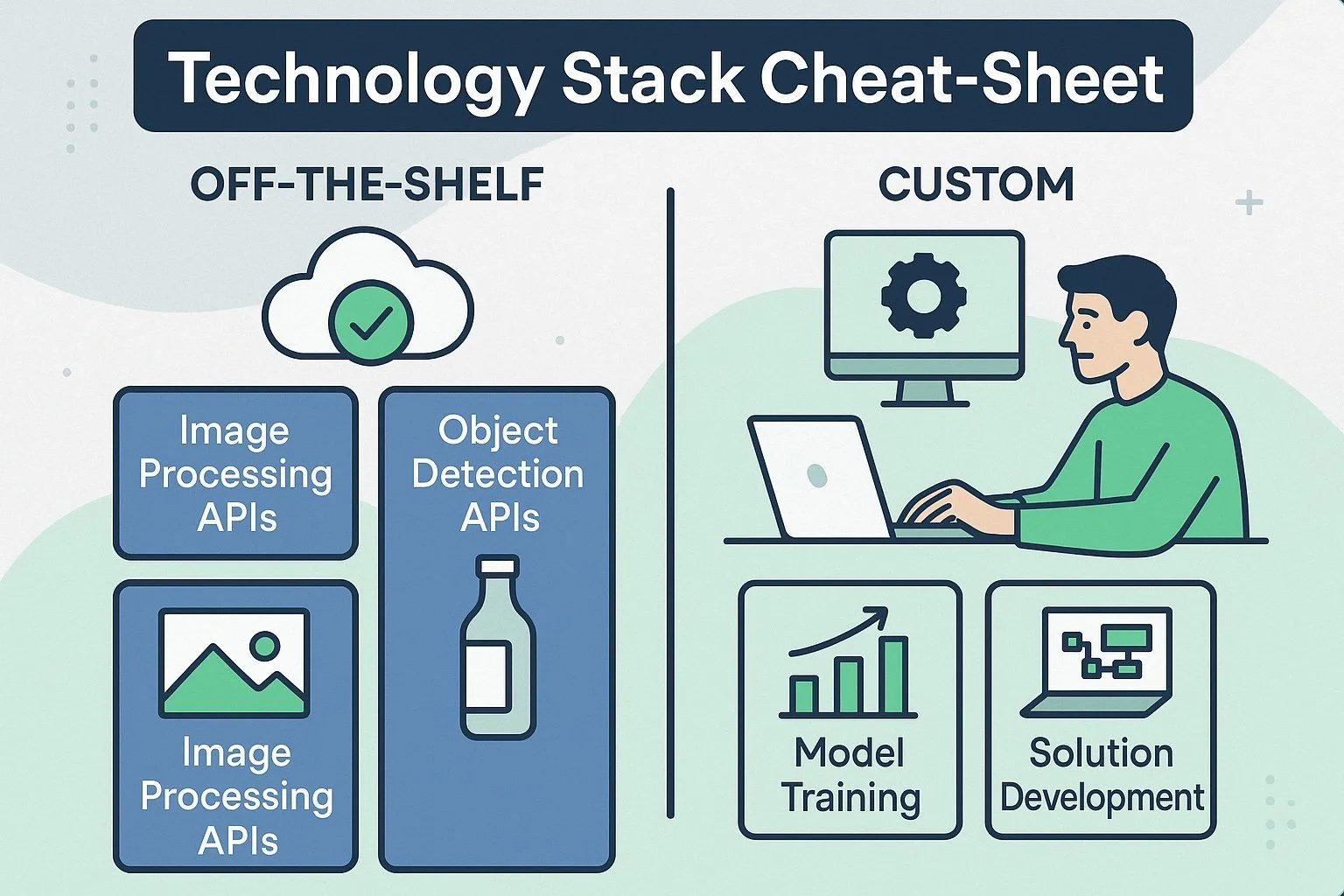

Technology Stack Cheat-Sheet (Off-the-Shelf + Custom)

Implementing an AI-powered vision inspection system doesn’t have to start with building everything from scratch. In fact, one of the biggest advantages of modern manufacturing tech is how plug-and-play it has become. With a smart combination of off-the-shelf APIs, modular tools, and targeted customization, even a small team can roll out a powerful defect-detection system in weeks, not months.

Let’s explore the key components of a typical setup — and where ready-made tools like cloud APIs fit in.

Start Fast with Ready-to-Use Vision APIs

For many common inspection tasks, off-the-shelf APIs are all you need to get started. These are pre-trained models hosted in the cloud, designed to handle popular use cases like surface flaw detection, object presence, or label verification. Platforms like API4AI offer a range of such services, including:

Object Detection API – Identify missing, misplaced, or extra parts in assemblies.

Image Labeling API – Categorize images or flag visually different items (e.g., wrong product types or packaging).

Defect Detection APIs – Spot scratches, dents, smudges, or shape deformities on surfaces.

Background Removal API – Isolate products from distracting backgrounds or fixtures for clearer analysis.

OCR API – Read serial numbers, batch codes, or expiration dates printed on components or packaging (QR and other 2D codes are usually read by a dedicated barcode decoder).

These APIs can be called directly from the production line’s camera system. All it takes is capturing an image and sending it over to the API; the response returns a defect score, label, or bounding box — ready for use in your quality control logic.
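In code, that round trip is an HTTP request with the image attached and a JSON response to interpret. The sketch below uses only the Python standard library; the endpoint URL, the raw-bytes upload, the bearer-token header, and the response fields (`label`, `score`) are hypothetical placeholders, not API4AI's actual interface, so check your provider's documentation for the real request and response format.

```python
import json
import urllib.request

# Hypothetical endpoint; replace with the URL and auth scheme your provider documents.
API_URL = "https://vision.example.com/v1/inspect"


def call_vision_api(image_bytes: bytes, api_key: str, timeout_s: float = 2.0) -> dict:
    """POST raw JPEG bytes and return the parsed JSON response."""
    req = urllib.request.Request(
        API_URL,
        data=image_bytes,
        headers={"Content-Type": "image/jpeg", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout_s) as resp:
        return json.load(resp)


def to_decision(response: dict, threshold: float = 0.8) -> bool:
    """True if the (hypothetical) response flags a defect at or above the threshold."""
    return response.get("label") == "defect" and response.get("score", 0.0) >= threshold


# Offline example using a hand-written response in the assumed format:
print(to_decision({"label": "defect", "score": 0.93}))  # True
```

Keeping the network call and the decision logic in separate functions means the decision rule can be unit-tested without a camera or an internet connection.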

Add Special Functions with Niche Models

Some manufacturing use cases require more specialized capabilities. Luckily, many APIs built for other domains can be repurposed creatively:

NSFW or Logo Recognition APIs – Originally designed for content moderation or brand detection, these can sometimes be repurposed to flag foreign objects, contamination, or misplaced branding, provided they are validated on your own product images.

Alcohol or Wine Label Recognition – Useful in food or beverage manufacturing for validating that the correct label is on the correct bottle type.

Car Background Removal API – May be usable outside the automotive industry for quickly segmenting complex objects from a busy environment, though results should be checked carefully because the model was built for vehicle images.

This flexibility means you can mix and match vision tools to suit your exact needs, without developing custom AI models for every tiny task.

When to Go Custom

Off-the-shelf models are great for rapid deployment, but they have limits. You’ll need custom AI models if:

Your products are highly unique or visually complex.

The defects you’re catching are subtle (like micro-cracks or faint discoloration).

You use rare materials or manufacturing methods that don’t appear in public datasets.

You want to combine multiple sensor inputs — like infrared, thermal, or 3D imaging — with visual inspection.

In these cases, working with a vision AI provider to build and train a tailored model using your own images is the best path forward. This often results in better accuracy and fewer false positives or negatives.

Cloud vs. Edge vs. Hybrid: Choosing Your Deployment Style

How your inspection AI runs depends on your setup and priorities:

Cloud Inference – Easy to deploy, scalable, and powerful. Great when you have a stable internet connection and don’t mind a short delay (usually under 500 ms).

Edge Inference – Run the AI model directly on a local device (like a GPU-enabled computer or smart camera). Ideal when decisions must be made in milliseconds or when internet access is limited.

Hybrid Models – Use edge devices to pre-process images and send only important data to the cloud. Balances performance and bandwidth.
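The hybrid pattern can be as simple as a cheap check on the edge device that decides which frames are worth a cloud call. In the sketch below, the edge check compares each frame with a reference image of a known-good part (mean absolute pixel difference) and forwards only frames that differ noticeably; the reference-difference heuristic and the tolerance value are illustrative assumptions, not a prescribed method.

```python
from typing import List, Sequence

Frame = Sequence[int]  # flattened grayscale pixels


def mean_abs_diff(a: Frame, b: Frame) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def frames_to_upload(frames: List[Frame], reference: Frame, tolerance: float = 8.0) -> List[int]:
    """Edge-side gate: indices of frames different enough to send for cloud inference."""
    return [i for i, f in enumerate(frames) if mean_abs_diff(f, reference) > tolerance]


reference = [100, 100, 100, 100]
frames = [
    [101, 99, 100, 102],  # near-identical to the reference: decided locally
    [100, 100, 30, 100],  # dark spot: forward for full cloud inference
    [98, 103, 100, 99],
]
print(frames_to_upload(frames, reference))  # [1]
```

When most parts are good, most frames never leave the plant, which saves bandwidth and API calls; the trade-off is that defects too subtle for the edge check never get a second opinion from the cloud model.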

API4AI supports both cloud and containerized deployment options, allowing manufacturers to integrate AI where it fits best.

By combining off-the-shelf APIs, creative repurposing of existing models, and strategic customization, manufacturers can build robust and scalable vision systems. This modular, flexible approach keeps costs down, shortens rollout time, and gives teams full control over how they monitor and eliminate defects — paving the way for cleaner, greener, and smarter production.

Green KPIs & Board-Friendly ROI

When manufacturers think about reducing defects, the first benefit that usually comes to mind is cost savings. But today’s smart factories have more than just efficiency goals — they’re also under pressure to meet sustainability targets. Fortunately, AI-powered vision inspection helps with both. By cutting down on scrap and rework, companies not only lower production costs but also improve the green KPIs they report to executives, shareholders, and regulators.

Let’s take a closer look at the key performance indicators (KPIs) that matter and how defect detection connects directly to them.

Defect Rate ↓, First-Pass Yield ↑

These are classic metrics in quality control, and they become even more powerful when viewed through a sustainability lens:

Defect Rate: The percentage of products that don’t meet quality standards on the first try. Lowering this rate means less material is wasted, fewer products are reworked, and less energy is consumed overall.

First-Pass Yield (FPY): The percentage of products that meet all quality standards without needing rework. A higher FPY means your line is running efficiently and cleanly — with fewer interruptions and less resource use.

Even a small improvement in FPY can lead to major savings over time, especially in high-volume production lines.
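Both metrics come straight from production counts. A minimal sketch, assuming you log how many units were started and how many passed inspection on the first attempt; the shift counts in the example are invented.

```python
def defect_rate(units_started: int, passed_first_time: int) -> float:
    """Share of units that failed the first inspection (later reworked or scrapped)."""
    return 1 - passed_first_time / units_started


def first_pass_yield(units_started: int, passed_first_time: int) -> float:
    """Share of units that met all standards without rework."""
    return passed_first_time / units_started


# Invented shift: 2,000 units started, 1,940 passed without rework.
print(f"defect rate: {defect_rate(2000, 1940):.1%}")  # defect rate: 3.0%
print(f"FPY: {first_pass_yield(2000, 1940):.1%}")     # FPY: 97.0%
```

With these definitions the two metrics are complements, so every point of defect rate removed shows up directly as a point of FPY gained.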

Energy & CO₂ Per Good Unit

Manufacturers are increasingly expected to track their carbon footprint, often broken down by:

kWh per unit produced

CO₂ equivalent (CO₂e) per unit shipped

By using AI to prevent defective products early, the factory avoids wasting electricity, gas, water, or compressed air on items that won’t make it out the door. This directly reduces the energy and emissions required to produce each good unit. For example:

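If your factory uses 10 kWh per unit produced and scraps 5% of them, roughly 0.5 kWh of every 10 kWh goes into units that are thrown away; spread over the units that actually ship, each good unit carries 10 / 0.95 ≈ 10.53 kWh. Improving the scrap rate to 1% brings that down to about 10.10 kWh, a saving of roughly 0.4 kWh per good unit, multiplied across thousands or millions of products per year.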
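Computed exactly, the energy charged to each good unit is the energy per unit produced divided by (1 − scrap rate), because the energy of scrapped units is spread over the units that ship. A minimal sketch using the figures from the example; the grid emission factor is a hypothetical placeholder for the value your utility or region publishes.

```python
def kwh_per_good_unit(kwh_per_unit: float, scrap_rate: float) -> float:
    """Energy charged to each shipped unit when scrapped units' energy is spread over good ones."""
    return kwh_per_unit / (1 - scrap_rate)


before = kwh_per_good_unit(10, 0.05)
after = kwh_per_good_unit(10, 0.01)
print(f"{before:.3f} -> {after:.3f} kWh per good unit, saving {before - after:.3f} kWh")
# 10.526 -> 10.101 kWh per good unit, saving 0.425 kWh

GRID_KG_CO2E_PER_KWH = 0.4  # hypothetical emission factor; use your published local value
print(f"{(before - after) * GRID_KG_CO2E_PER_KWH:.3f} kg CO2e saved per good unit")
# 0.170 kg CO2e saved per good unit
```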

Vision systems help track and document these improvements clearly, making it easier to show regulators, auditors, or ESG investors how your operations are becoming greener.

Material Usage Efficiency

Another measurable win: reduced raw material usage. When fewer parts are rejected, you use less of everything — metals, plastics, coatings, adhesives, and even packaging. This reduces:

Your purchasing costs

Your environmental footprint (especially from high-impact materials like aluminum or rare alloys)

Your waste disposal and recycling loads

These reductions can be reported in tons of material saved per year or cost per good unit, both of which matter to CFOs and sustainability teams alike.

Fewer Rush Shipments & Lower Inventory Overhead

Defective products often lead to delays and last-minute shipments to meet customer deadlines. These emergency logistics moves — like air freight or express delivery — burn more fuel and cost more money.

With fewer defects:

Delivery schedules stay on track

Standard (and greener) shipping methods can be used

Less inventory padding is needed to buffer against rejects

That translates into fewer emissions and smoother operations across the board.

ROI That Speaks to Everyone

For the operations team, vision inspection means fewer bottlenecks and less downtime. For the finance team, it means lower cost per unit. And for sustainability leaders, it means real, measurable progress toward environmental goals.

What makes AI-powered defect detection especially attractive is that its payback period can be short. Payback within months rather than years is often possible, because reduced waste, fewer recalls, and lower energy bills start to show up as soon as the system is running.
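A payback estimate is simple once you have monthly savings and running-cost figures. The sketch below takes an upfront cost, the monthly savings from avoided scrap and rework and from energy, and the monthly running cost; every number in the example is hypothetical and exists only to show the calculation.

```python
import math


def payback_months(upfront_cost: float, monthly_scrap_savings: float,
                   monthly_energy_savings: float, monthly_running_cost: float) -> float:
    """Months until cumulative net savings cover the upfront investment."""
    net_monthly = monthly_scrap_savings + monthly_energy_savings - monthly_running_cost
    if net_monthly <= 0:
        return math.inf  # never pays back under these assumptions
    return upfront_cost / net_monthly


# Hypothetical pilot: 40,000 upfront, 9,000/month scrap and rework avoided,
# 1,500/month energy saved, 2,500/month for API calls and maintenance.
print(f"{payback_months(40_000, 9_000, 1_500, 2_500):.1f} months")  # 5.0 months
```

Including the running cost (API usage, hardware upkeep) keeps the estimate honest; leaving it out is the easiest way to overstate ROI.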

In short, vision-based inspection isn’t just a technical upgrade — it’s a business case and a sustainability case in one. And the numbers are easy to track, share, and celebrate across departments. Whether you’re reporting to your plant manager or presenting to the board, defect-free production backed by smart AI delivers the kind of KPIs everyone wants to see.

Deployment Playbook – From Pilot Cell to Plant-Wide Roll-Out

Rolling out AI-powered vision inspection doesn’t have to be complicated or risky. With today’s cloud APIs, flexible camera setups, and low-code integrations, manufacturers can start small, test quickly, and scale confidently. The smartest approach is to treat deployment like a product launch: start with a pilot, prove the value, then expand.

Here’s a step-by-step playbook to guide you through the process.

Step 1: Run a 30-Day Vision Sprint

Begin with a single pilot cell — a small, well-understood part of your production line where defects are easy to spot and already tracked manually. The goal isn’t perfection, but speed and proof of value.

What to do:

Mount cameras: Set up one or more high-resolution cameras at key inspection points.

Connect to cloud APIs: Use ready-to-go tools like API4AI’s Object Detection or Image Labeling API to process images in real time.

Stream images: Automatically send captured images to the cloud and collect defect predictions.

Compare results: Benchmark the AI's detection accuracy against what human inspectors or QC reports show.

You’ll likely discover that even a basic setup can catch issues earlier — and more consistently — than human-only checks.
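For the comparison step, it helps to line up the AI's verdict and the inspector's verdict for the same parts and count where they agree. A minimal sketch, treating the QC record as ground truth (an assumption: human inspectors make mistakes too, so disagreements are worth reviewing by hand rather than scoring automatically); the sample records are invented.

```python
from collections import Counter
from typing import Dict, List, Tuple

# (part id, AI says defective, QC record says defective). Invented pilot data.
RECORDS: List[Tuple[str, bool, bool]] = [
    ("p1", True, True), ("p2", False, False), ("p3", True, False),
    ("p4", False, True), ("p5", True, True), ("p6", False, False),
]


def compare(records: List[Tuple[str, bool, bool]]) -> Dict[str, float]:
    c = Counter((ai, qc) for _, ai, qc in records)
    tp, fp, fn = c[(True, True)], c[(True, False)], c[(False, True)]
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,  # AI flags that QC confirms
        "recall": tp / (tp + fn) if tp + fn else 0.0,     # QC defects the AI also caught
        "agreement": (tp + c[(False, False)]) / len(records),
    }


print(compare(RECORDS))
disagreements = [pid for pid, ai, qc in RECORDS if ai != qc]
print(disagreements)  # ['p3', 'p4']: review these images by hand
```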

Step 2: Curate and Annotate Real-World Data

To improve accuracy over time, start collecting a dataset of real production images, both good and bad. This is key if you later want to move from generic APIs to a custom-trained model.

Best practices:

Tag edge cases: Include hard-to-spot defects, unusual lighting conditions, and damaged parts that sometimes slip through.

Organize by defect type: This helps train smarter AI later and makes quality trends more visible.

Involve operators: Let frontline workers flag missed defects or false positives — they’re your best early-warning system.
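A lightweight way to keep such a dataset organized is one JSON record per image, noting the defect type, whether it is an edge case, and who labeled it. The field names and defect categories below are hypothetical; any consistent schema works, and annotation tools typically export their own formats.

```python
import json
from collections import Counter

# One JSON object per line (JSON Lines). Field names are illustrative, not a standard.
ANNOTATIONS_JSONL = """\
{"image": "line2/cam1/0001.jpg", "defect": "none", "edge_case": false, "labeled_by": "qc"}
{"image": "line2/cam1/0002.jpg", "defect": "scratch", "edge_case": false, "labeled_by": "qc"}
{"image": "line2/cam1/0003.jpg", "defect": "scratch", "edge_case": true, "labeled_by": "operator"}
{"image": "line2/cam1/0004.jpg", "defect": "missing_screw", "edge_case": false, "labeled_by": "operator"}
"""

records = [json.loads(line) for line in ANNOTATIONS_JSONL.splitlines() if line.strip()]

by_type = Counter(r["defect"] for r in records)      # organize by defect type
edge_cases = [r["image"] for r in records if r["edge_case"]]
operator_flags = sum(r["labeled_by"] == "operator" for r in records)

print(by_type)         # Counter({'scratch': 2, 'none': 1, 'missing_screw': 1})
print(edge_cases)      # ['line2/cam1/0003.jpg']
print(operator_flags)  # 2
```

Counting records per defect type early also shows which defects are under-represented before any custom model training begins.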

Step 3: Integrate Alerts into Existing Systems

You don’t need to rip out existing control systems. Most vision pipelines can plug into your current MES, SCADA, or quality dashboards using webhooks, REST APIs, or middleware.

Integration ideas:

Show defect alerts on operator screens or mobile devices.

Trigger line stops or diverters automatically for high-confidence defects.

Store inspection data in your plant database or send it to your ERP for traceability.

With lightweight integration, your quality teams stay informed without adding complexity to their daily routines.
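The routing logic behind these integration ideas can stay small. The sketch below turns an inspection result into a JSON event and picks an action by confidence tier; the tier thresholds, the event fields, and posting to an MES webhook URL are assumptions for illustration, since every MES or SCADA integration defines its own interface.

```python
import json
import urllib.request
from datetime import datetime, timezone


def choose_action(defect_score: float) -> str:
    """Confidence tiers (hypothetical values): stop or divert only when the model is sure."""
    if defect_score >= 0.95:
        return "divert"          # automatic reject gate or line stop
    if defect_score >= 0.6:
        return "alert_operator"  # a human decides
    return "log_only"            # stored for traceability


def build_event(part_id: str, station: str, defect_score: float) -> dict:
    return {
        "part_id": part_id,
        "station": station,
        "defect_score": round(defect_score, 3),
        "action": choose_action(defect_score),
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def post_event(event: dict, webhook_url: str) -> int:
    """Send the event to a (hypothetical) MES or dashboard webhook; returns the HTTP status."""
    req = urllib.request.Request(
        webhook_url,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=2.0) as resp:
        return resp.status


event = build_event("A-10442", "mid-process-cam2", 0.97)
print(event["action"])  # divert
```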

Step 4: Scale with Confidence

Once the pilot proves its value, you can begin scaling across the plant. Each new camera or checkpoint can follow the same basic playbook. As you scale:

Use containerized models: Deploy AI locally on edge devices using Docker or similar tools when low latency is critical.

Run A/B testing: Try different model versions to see which catches more defects without over-alerting.

Create a central monitoring hub: Let engineers and managers view inspection results, failure trends, and KPIs from a single dashboard.

Scaling doesn’t mean complexity. A modular system of cameras + APIs + alert logic can be rolled out in stages — line by line or shift by shift.
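For the A/B testing step, one common approach is to split traffic deterministically, so each part always goes to the same model version and results can be compared afterwards. The sketch hashes the part ID to assign it; the version names and the 10% share for the candidate model are hypothetical.

```python
import hashlib


def assign_model(part_id: str, candidate_share: float = 0.10) -> str:
    """Deterministically route a part to the current or candidate model version."""
    digest = hashlib.sha256(part_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") / 2**64  # roughly uniform value in [0, 1)
    return "model-candidate" if bucket < candidate_share else "model-current"


assignments = [assign_model(f"part-{i}") for i in range(10_000)]
share = assignments.count("model-candidate") / len(assignments)
print(f"candidate share: {share:.1%}")  # close to 10%, and identical on every run
```

Hashing rather than random sampling means a re-inspected part lands on the same model. When you want side-by-side scores for every part, a shadow-mode variant (run both models, act only on the current one) is an alternative.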

Step 5: Keep the Feedback Loop Alive

Vision systems get smarter with time — if you feed them the right data. Continue updating your image library and retraining your models (if customized) every few months. You’ll catch more defects, reduce false alarms, and respond to new issues faster.

Pro tip: Some providers of off-the-shelf APIs also offer fine-tuning with your own data. Reach out to providers like API4AI for guidance on hybrid models: pre-trained, with the option to specialize.

With this approach, you don’t just install a vision system — you build a living, learning quality tool that adapts to your products and improves your sustainability score with every inspection. Start small, stay focused, and let the wins build momentum. A smarter, greener factory is closer than you think.

Conclusion – Sustainability That Pays for Itself

In manufacturing, reducing defects has always been a sign of good process control. But today, it’s something more — it’s a direct path to sustainability and competitive advantage. Every time a flaw is caught early, a product is saved from becoming waste, and energy, materials, and money are preserved. Vision AI turns this into a repeatable, scalable process, helping factories meet both their quality and environmental goals.

Throughout this post, we’ve shown how AI-powered visual inspection helps:

Cut down scrap and rework

Lower energy usage per unit

Save raw materials and packaging

Improve carbon metrics and ESG reports

Reduce costs in logistics, labor, and warranty claims

And all of this can be done without overhauling your factory. Thanks to modern cloud APIs and AI toolkits, even small and mid-sized manufacturers can deploy smart inspection systems using existing cameras and infrastructure. Off-the-shelf tools like object detection, image labeling, OCR, and defect recognition APIs — available from platforms like API4AI — make it fast to start and simple to scale.

For specialized needs, custom-trained models offer even more precision. And whether you choose cloud-based or edge deployment, the flexibility is there to match your factory’s speed, bandwidth, and security requirements.

In the end, defect-free production isn’t just a dream — it’s a practical step toward leaner operations, greener metrics, and a stronger brand reputation. The tools are ready. The ROI is clear. And the climate benefit is real.

So whether you’re chasing cost reduction, carbon neutrality, or both, vision AI is one of the smartest investments you can make on the path to a cleaner, more efficient future.